JavaScript scrapers: An Overview of Capabilities

JavaScript scrapers let you build full-fledged scrapers with logic of any complexity in JavaScript. JS scrapers can also use the full functionality of the standard scrapers.

Features

With the full power of A-Parser at your disposal, you can write your own scraper, regger, or poster with logic of any complexity. Code is written in JavaScript with ES6 features (V8 engine).

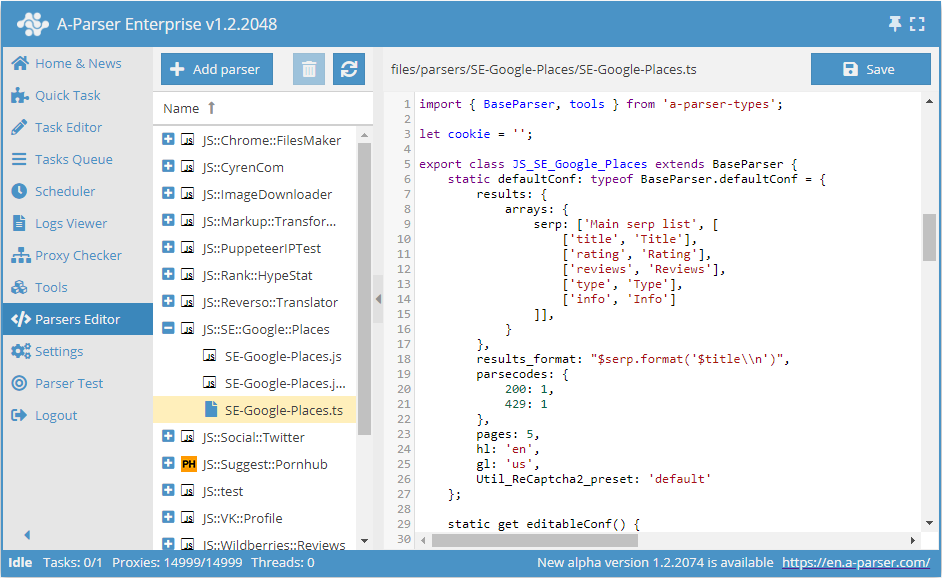

Scraper code is kept as concise as possible so that you can focus on the logic; A-Parser takes care of multithreading, networking, proxies, results, logs, etc. You can write code directly in the scraper interface by adding a new scraper in the Parser Editor, or use an external editor such as VSCode.

Automatic versioning is used when saving scraper code through the built-in editor.

JavaScript scrapers are available with Pro and Enterprise licenses.

Access to the JS Scraper Editor

If A-Parser is used remotely, for security reasons the JS Scraper Editor is not available by default. To grant access to it, you need to:

- Set a password in Settings -> General Settings

- Add the following line to config/config.txt:

allow_javascript_editor: 1

- Restart A-Parser

Operating Instructions

In the Parser Editor, create a new scraper and give it a name. By default, a simple example is loaded that you can use as a starting point for your own scraper.

If you write code in an external editor, open the scraper's file in the /parsers/ folder (see File structure of the installed program).

Once the code is ready, save it and use it like a regular scraper: select it in the Task Editor and, if necessary, set the required parameters, thread config, file name, etc.

You can edit the created scraper at any time. All changes related to the interface will appear after re-selecting the scraper from the list of scrapers or restarting A-Parser; changes in the scraper logic are applied when the task with the scraper is restarted.

By default, a standard icon is displayed for each created scraper. You can add your own icon in png or ico format by placing it in the scraper's folder in /parsers/.

General Operating Principles

By default, an example of a simple scraper is created, ready for further editing.

TypeScript:

```typescript
import { BaseParser } from 'a-parser-types';

export class JS_v2_example extends BaseParser {
    static defaultConf: typeof BaseParser.defaultConf = {
        version: '0.0.1',
        results: {
            flat: [
                ['title', 'HTML title'],
            ]
        },
        max_size: 2 * 1024 * 1024,
        parsecodes: {
            200: 1,
        },
        results_format: '$query: $title\\n',
    };

    static editableConf: typeof BaseParser.editableConf = [];

    async parse(set, results) {
        this.logger.put("Start scraping query: " + set.query);

        let response = await this.request('GET', set.query, {}, {
            check_content: ['<\/html>'],
            decode: 'auto-html',
        });

        if (response.success) {
            let matches = response.data.match(/<title>(.*?)<\/title>/i);
            if (matches)
                results.title = matches[1];
        }

        results.success = response.success;

        return results;
    }
}
```

JavaScript:

```javascript
const { BaseParser } = require("a-parser-types");

class JS_v2_example_js extends BaseParser {
    static defaultConf = {
        version: '0.0.1',
        results: {
            flat: [
                ['title', 'HTML title'],
            ]
        },
        max_size: 2 * 1024 * 1024,
        parsecodes: {
            200: 1,
        },
        results_format: '$query: $title\\n',
    };

    static editableConf = [];

    async parse(set, results) {
        this.logger.put("Start scraping query: " + set.query);

        let response = await this.request('GET', set.query, {}, {
            check_content: ['<\/html>'],
            decode: 'auto-html',
        });

        if (response.success) {
            let matches = response.data.match(/<title>(.*?)<\/title>/i);
            if (matches)
                results.title = matches[1];
        }

        results.success = response.success;

        return results;
    }
}
```

The constructor is called once for each task. You must set this.defaultConf.results and this.defaultConf.results_format; all other fields are optional and take default values.
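As a rough illustration of this rule, the sketch below checks a configuration object for the two mandatory fields. The helper missingRequiredFields is hypothetical and not part of A-Parser; it only mirrors the requirement stated here.

```javascript
// Hypothetical helper (not an A-Parser API): lists the mandatory fields
// that are absent from a defaultConf-style object.
const REQUIRED_FIELDS = ['results', 'results_format'];

function missingRequiredFields(conf) {
    return REQUIRED_FIELDS.filter((name) => conf[name] === undefined);
}

// The two mandatory fields, with the same values as in the example scraper.
const minimalConf = {
    results: { flat: [['title', 'HTML title']] },
    results_format: '$query: $title\\n',
};

console.log(missingRequiredFields(minimalConf));          // nothing missing
console.log(missingRequiredFields({ version: '0.0.1' })); // both fields missing
```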

The array this.editableConf defines which settings can be changed by the user from the A-Parser interface. You can use the following types of fields:

- combobox: a drop-down selection menu. You can also make a selection menu for a preset of a standard scraper, for example:

['Util_AntiGate_preset', ['combobox', 'AntiGate preset']]

- combobox with the ability to select multiple options. You need to additionally set the parameter {'multiSelect': 1}:

['proxyCheckers', ['combobox', 'Proxy Checkers', {'multiSelect': 1}, ['*', 'All']]]

- checkbox: a checkbox for parameters that can have only 2 values (true/false)

- textfield: a text field

- textarea: a multiline text field
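The field types listed above might be combined as in the following sketch. The two combobox entries are copied from the examples above. The checkbox, textfield, and textarea entries are assumptions that reuse the same [name, [type, label]] shape, and the option names use_cache, user_agent, and notes are hypothetical.

```javascript
// Sketch of an editableConf array. Only the combobox entries come from the
// documentation; the rest assume the same [name, [type, label]] shape.
const editableConf = [
    ['Util_AntiGate_preset', ['combobox', 'AntiGate preset']],
    ['proxyCheckers', ['combobox', 'Proxy Checkers', { multiSelect: 1 }, ['*', 'All']]],
    ['use_cache', ['checkbox', 'Use cache']],    // hypothetical option
    ['user_agent', ['textfield', 'User-Agent']], // hypothetical option
    ['notes', ['textarea', 'Notes']],            // hypothetical option
];

// Print the option name and field type of every entry.
for (const [name, [type]] of editableConf) {
    console.log(`${name}: ${type}`);
}
```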

The parse method is an asynchronous function, and every blocking operation inside it must be called with await (this is the main and only difference from a regular function). The method is called for each query being processed. It receives set (a hash with the query and its parameters) and results (an empty template for the results). It must return the filled results after setting the success flag.
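This contract can be tried outside A-Parser with a stand-in for this.request. In the sketch below, FakeParser and its request stub are hypothetical; only the shape of parse (await the request, fill results, set success, return results) follows the example scraper.

```javascript
// Hypothetical stand-in for BaseParser so the sketch runs without A-Parser.
class FakeParser {
    // Stub of this.request: resolves with a canned page instead of using the network.
    async request(method, url) {
        return { success: true, data: '<html><title>Example page</title></html>' };
    }

    async parse(set, results) {
        // Every blocking call is awaited.
        const response = await this.request('GET', set.query);

        if (response.success) {
            const matches = response.data.match(/<title>(.*?)<\/title>/i);
            if (matches) results.title = matches[1];
        }

        // Set the success flag, then return the filled results.
        results.success = response.success;
        return results;
    }
}

// Usage: an empty results template goes in, a filled one comes back.
const parsed = new FakeParser().parse({ query: 'http://example.com/' }, {});
parsed.then((results) => console.log(results));
```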

Automatic Versioning

The version has the format Major.Minor.Revision.

TypeScript:

```typescript
this.defaultConf: typeof BaseParser.defaultConf = {
    version: '0.1.1',
    ...
}
```

JavaScript:

```javascript
this.defaultConf = {
    version: '0.1.1',
    ...
}
```

The Revision value (the last digit) is automatically incremented with each save. The other values (Major, Minor) can be changed manually, and the Revision can be reset to 0.
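The increment rule can be illustrated with a small function. This is only a sketch of the behaviour described here, not A-Parser's actual implementation, and the name bumpRevision is hypothetical.

```javascript
// Hypothetical illustration of the save-time rule: Major and Minor stay
// unchanged, Revision (the last number) grows by one.
function bumpRevision(version) {
    const [major, minor, revision] = version.split('.').map(Number);
    return `${major}.${minor}.${revision + 1}`;
}

console.log(bumpRevision('0.1.1')); // 0.1.2
console.log(bumpRevision('0.2.0')); // 0.2.1 (Minor raised manually, Revision reset to 0)
```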

If you need the Revision to change only when you edit it manually, enclose the version in double quotes ("").

Bulk Query Processing

In some cases, you may need to take several queries from the queue at once and process them together. For example, SE::Yandex::Direct::Frequency uses this functionality: when scraping with accounts, data is collected in batches of 10 queries.

To implement the same functionality in a JS scraper, set bulkQueries: N in this.defaultConf, where N is the number of queries per batch. The scraper then takes queries in batches of N, and all queries of the current iteration are available in the array set.bulkQueries (each with all the standard variables: query.first, query.orig, query.prev, etc.). Below is an example of such an array:

```json
[
    {
        "first": "test",
        "prev": "",
        "lvl": 0,
        "num": 0,
        "query": "test",
        "queryUid": "6eb301",
        "orig": "test"
    },
    {
        "first": "проверка",
        "prev": "",
        "lvl": 0,
        "num": 1,
        "query": "проверка",
        "queryUid": "774563",
        "orig": "проверка"
    },
    {
        "first": "third query",
        "prev": "",
        "lvl": 0,
        "num": 2,
        "query": "third query",
        "queryUid": "2bc8ed",
        "orig": "third query"
    }
]
```

During bulk processing, results must be written to the array results.bulkResults, where each element is a results object. The elements of results.bulkResults must follow the same order as the queries in set.bulkQueries.
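A minimal sketch of this contract follows. The names set.bulkQueries, results.bulkResults, and bulkQueries come from the description above. The helper fillBulkResults and the length field it stores are hypothetical placeholders for whatever a real scraper would collect, and the sketch assumes each element carries its own success flag.

```javascript
// bulkQueries: N in defaultConf makes the scraper receive N queries
// per iteration (N = 3 in this sketch).
const defaultConf = { bulkQueries: 3 };

// Hypothetical helper: builds one result object per query, keeping
// the order of set.bulkQueries.
function fillBulkResults(set, results) {
    results.bulkResults = set.bulkQueries.map((item) => ({
        success: true,
        length: item.query.length, // placeholder for real scraped data
    }));
    return results;
}

// Shortened version of the example array shown earlier.
const set = {
    bulkQueries: [
        { query: 'test', num: 0 },
        { query: 'проверка', num: 1 },
        { query: 'third query', num: 2 },
    ],
};

const results = fillBulkResults(set, {});
results.bulkResults.forEach((r, i) => console.log(set.bulkQueries[i].query, r));
```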

Useful Links

📄️ BulkQueries example

Example of using bulkQueries with a call to a built-in scraper

🔗 Examples and discussion

Forum topic with examples and discussion of JS scraper functionality

🔗 JS scraper catalog

Section in the resource catalog dedicated to JS scrapers

🔗 Overview of basic ES6 features

Article on habrahabr dedicated to an overview of basic ES6 features