HTTP requests (+working with cookies, proxies, sessions)

Base Class Methods

To collect data from a web page, you need to execute an HTTP request. The JavaScript API v2 of A-Parser provides an easy-to-use method for executing HTTP requests; it returns a JSON object whose contents depend on the specified method arguments. Below you will learn how an HTTP request is made, what arguments and options the method accepts, what each option does, how to set the success condition for an HTTP request, and more.

Also described are methods that make it easy to manipulate cookies, proxies, and sessions in the scraper you create. Before or after a successful HTTP request, you can set or change the proxy, cookie, and session data used for subsequent HTTP requests, or save them for use by another thread via the Session Manager.

These methods are inherited from BaseParser and form the basis for creating custom scrapers.

await this.request(method, url[, queryParams][, opts])

await this.request(method, url, queryParams, opts)

Getting the HTTP response to a request, with the following arguments specified:

- method - request method (GET, POST...)

- url - the link for the request

- queryParams - a hash with GET parameters or a hash with the POST request body

- opts - a hash with request options

opts.check_content

check_content: [ condition1, condition2, ...] - an array of conditions to check the received content; if the check fails, the request will be repeated with a different proxy.

Capabilities:

- using strings as conditions (searching for string inclusion)

- using regular expressions as conditions

- using custom check functions to which the response data and headers are passed

- several different types of conditions can be specified at once

- for logical negation, enclose the condition in an array, i.e., check_content: ['xxxx', [/yyyy/]] means the request will be considered successful if the received data contains the substring xxxx and the regular expression /yyyy/ does not find matches on the page

All checks specified in the array must pass for the request to be successful.

Example (comments indicate what is needed for the request to be considered successful):

let response = await this.request('GET', set.query, {}, {

check_content: [

/<\/html>|<\/body>/, // this regular expression must match on the received page

['XXXX'], // this substring must not be present on the received page

'</html>', // this substring must be present on the received page

(data, hdr) => {

return hdr.Status == 200 && data.length > 100;

} // this function must return true

]

});
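
The rules above can be modelled in a few lines of plain JavaScript. The sketch below is not A-Parser's implementation; `checkCondition` and `passesCheckContent` are hypothetical names used only to illustrate how strings, regular expressions, functions, and array-wrapped negations could combine.

```javascript
// Hypothetical helpers: a model of the check_content rules, not A-Parser code.
function checkCondition(condition, data, hdr) {
    if (Array.isArray(condition)) {
        // A condition wrapped in an array is negated.
        return !checkCondition(condition[0], data, hdr);
    }
    if (typeof condition === 'string') return data.includes(condition);
    if (condition instanceof RegExp) return condition.test(data);
    if (typeof condition === 'function') return Boolean(condition(data, hdr));
    throw new TypeError('Unsupported condition type');
}

function passesCheckContent(conditions, data, hdr) {
    // Every condition in the array must pass.
    return conditions.every((condition) => checkCondition(condition, data, hdr));
}

const page = '<html><body>' + 'x'.repeat(120) + '</body></html>';
const hdr = { Status: 200 };
const conditions = [
    /<\/html>|<\/body>/,
    ['XXXX'],
    '</html>',
    (data, hdr) => hdr.Status == 200 && data.length > 100,
];

console.log(passesCheckContent(conditions, page, hdr)); // true
console.log(passesCheckContent(conditions, page + 'XXXX', hdr)); // false
```

The second call fails only because of the negated `['XXXX']` condition, which shows that one failed check is enough to reject the response.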

opts.decode

decode: 'auto-html' - automatic detection of encoding and conversion to utf8

Possible values:

- auto-html - based on headers, meta tags, and page content (the recommended option)

- utf8 - indicates that the document is in utf8 encoding

- <encoding> - any other encoding

opts.headers

headers: { ... } - a hash with headers; header names are given in lower case, and a cookie header can be included.

Example:

headers: {

accept: 'image/avif,image/webp,image/apng,image/svg+xml,image/*,*/*;q=0.8',

'accept-encoding': 'gzip, deflate, br',

cookie: 'a=321; b=test',

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.150 Safari/537.36'

}

opts.headers_order

headers_order: ['cookie', 'user-agent', ...] - allows overriding the header sorting order
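
To make the effect of an ordering list concrete, here is a minimal sketch in plain JavaScript. `orderHeaders` is a hypothetical helper, not an A-Parser function, and it assumes that headers missing from the list keep their original relative order after the listed ones; the source does not state how A-Parser treats them.

```javascript
// Hypothetical helper: arranges a headers hash according to an ordering list.
// Assumption: headers not named in `order` follow in their original order.
function orderHeaders(headers, order) {
    const has = (name) => Object.prototype.hasOwnProperty.call(headers, name);
    const ordered = [];
    const placed = new Set();
    for (const name of order) {
        if (has(name) && !placed.has(name)) {
            ordered.push([name, headers[name]]);
            placed.add(name);
        }
    }
    for (const [name, value] of Object.entries(headers)) {
        if (!placed.has(name)) ordered.push([name, value]);
    }
    return ordered;
}

const sampleHeaders = {
    accept: '*/*',
    'user-agent': 'test-agent',
    cookie: 'a=321; b=test',
};

const orderedNames = orderHeaders(sampleHeaders, ['cookie', 'user-agent']).map(([name]) => name);
console.log(orderedNames); // [ 'cookie', 'user-agent', 'accept' ]
```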

opts.onlyheaders

onlyheaders: 0 - determines whether the response body is read; if enabled (1), only headers are received

opts.recurse

recurse: N - maximum number of redirect hops, defaults to 7; use 0 to disable following redirects

opts.proxyretries

proxyretries: N - number of request attempts, defaults to the value from the scraper settings

opts.parsecodes

parsecodes: { ... } - list of HTTP response codes that the scraper will consider successful, defaults to the value from the scraper settings. If you specify '*': 1, all responses will be considered successful.

Example:

parsecodes: {

200: 1,

403: 1,

500: 1

}
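
The lookup described above, including the `'*'` wildcard, can be sketched as follows. `isAcceptedStatus` is a hypothetical helper for illustration only and does not come from A-Parser.

```javascript
// Hypothetical helper: decides whether a status code counts as successful
// for a given parsecodes hash. '*': 1 accepts every code.
function isAcceptedStatus(parsecodes, status) {
    return Boolean(parsecodes['*'] || parsecodes[status]);
}

const codes = { 200: 1, 403: 1, 500: 1 };

console.log(isAcceptedStatus(codes, 403)); // true
console.log(isAcceptedStatus(codes, 404)); // false
console.log(isAcceptedStatus({ '*': 1 }, 404)); // true
```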

opts.timeout

timeout: N - response timeout in seconds, defaults to the value from the scraper settings

opts.do_gzip

do_gzip: 1 - determines whether to use compression (gzip/deflate/br); enabled by default (1), set the value to 0 to disable it

opts.max_size

max_size: N - maximum response size in bytes, defaults to the value from the scraper settings

opts.cookie_jar

cookie_jar: { ... } - a hash with cookies. Example of a hash:

"cookie_jar": {

"version": 1,

".google.com": {

"/": {

"login": {

"value": "true"

},

"lang": {

"value": "ru-RU"

}

}

},

".test.google.com": {

"/": {

"id": {

"value": 155643

}

}

}

}
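
The hash is nested as domain, then path, then cookie name. The sketch below walks that structure and builds a `cookie` header value for a given host. `cookieHeaderFor` is a hypothetical helper, and its domain and path matching is deliberately simplified; it is not how A-Parser selects cookies internally.

```javascript
// Hypothetical helper: flattens a cookie_jar hash into a "name=value; ..." string.
// Simplified matching: a domain matches the host itself and its subdomains,
// a cookie path matches when the request path starts with it.
function cookieHeaderFor(cookieJar, host, path = '/') {
    const pairs = [];
    for (const [domain, paths] of Object.entries(cookieJar)) {
        // Skip the "version" entry, which is not a domain.
        if (typeof paths !== 'object' || paths === null) continue;
        const bare = domain.replace(/^\./, '');
        if (host !== bare && !host.endsWith('.' + bare)) continue;
        for (const [cookiePath, cookies] of Object.entries(paths)) {
            if (!path.startsWith(cookiePath)) continue;
            for (const [name, cookie] of Object.entries(cookies)) {
                pairs.push(`${name}=${cookie.value}`);
            }
        }
    }
    return pairs.join('; ');
}

const jar = {
    version: 1,
    '.google.com': { '/': { login: { value: 'true' }, lang: { value: 'ru-RU' } } },
    '.test.google.com': { '/': { id: { value: 155643 } } },
};

console.log(cookieHeaderFor(jar, 'www.google.com')); // login=true; lang=ru-RU
console.log(cookieHeaderFor(jar, 'test.google.com')); // login=true; lang=ru-RU; id=155643
```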

opts.attempt

attempt: N - indicates the current attempt number; when this parameter is used, the built-in attempt handler is ignored for this request

opts.browser

browser: 1 - automatic emulation of browser headers (1 - enabled, 0 - disabled)

opts.use_proxy

use_proxy: 1 - overrides the use of a proxy for a single request inside the JS scraper on top of the global parameter Use proxy (1 - enabled, 0 - disabled)

opts.noextraquery

noextraquery: 0 - disables adding Extra query string to the request URL (1 - enabled, 0 - disabled)

opts.save_to_file

save_to_file: file - allows downloading the file directly to disk, bypassing memory storage. Instead of file, specify the path and name under which to save the file. When this option is used, everything related to data is skipped (content validation in opts.check_content will not be performed, response.data will be empty, etc.)

opts.bypass_cloudflare

bypass_cloudflare: 0 - automatic bypass of CloudFlare JavaScript protection using the Chrome browser (1 - enabled, 0 - disabled)

In this case, Chrome Headless is controlled by the scraper settings bypassCloudFlareChromeMaxPages and bypassCloudFlareChromeHeadless, which must be specified in static defaultConf and static editableConf:

static defaultConf: typeof BaseParser.defaultConf = {

version: '0.0.1',

results: {

flat: [

['title', 'Title'],

]

},

max_size: 2 * 1024 * 1024,

parsecodes: {

200: 1,

},

results_format: "$title\n",

bypass_cloudflare: 1,

bypassCloudFlareChromeMaxPages: 20,

bypassCloudFlareChromeHeadless: 0

};

static editableConf: typeof BaseParser.editableConf = [

['bypass_cloudflare', ['textfield', 'bypass_cloudflare']],

['bypassCloudFlareChromeMaxPages', ['textfield', 'bypassCloudFlareChromeMaxPages']],

['bypassCloudFlareChromeHeadless', ['textfield', 'bypassCloudFlareChromeHeadless']],

];

async parse(set, results) {

const {success, data, headers} = await this.request('GET', set.query, {}, {

bypass_cloudflare: this.conf.bypass_cloudflare

});

return results;

}

opts.follow_meta_refresh

follow_meta_refresh: 0 - allows following redirects declared via an HTML meta tag:

<meta http-equiv="refresh" content="time; url=..."/>
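
For reference, extracting the target of such a tag can be sketched with two regular expressions. `parseMetaRefresh` is a hypothetical helper that handles only the simple form shown above (double- or single-quoted content attribute, unquoted URL); it is not A-Parser's parser.

```javascript
// Hypothetical helper: extracts delay and URL from a meta refresh tag.
// Returns null when no usable tag is found.
function parseMetaRefresh(html) {
    const tag = html.match(/<meta[^>]*http-equiv\s*=\s*["']?refresh["']?[^>]*>/i);
    if (!tag) return null;
    const content = tag[0].match(/content\s*=\s*(["'])(.*?)\1/i);
    if (!content) return null;
    const parts = content[2].match(/^\s*(\d+)\s*(?:[;,]\s*url\s*=\s*(.+?)\s*)?$/i);
    if (!parts) return null;
    return { delay: Number(parts[1]), url: parts[2] || null };
}

const refreshHtml = '<head><meta http-equiv="refresh" content="5; url=https://example.com/next"/></head>';

console.log(parseMetaRefresh(refreshHtml)); // { delay: 5, url: 'https://example.com/next' }
console.log(parseMetaRefresh('<head></head>')); // null
```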

opts.redirect_filter

redirect_filter: (hdr) => 1 | 0 - allows setting a function to filter redirects; if the function returns 1, the scraper follows the redirect (subject to the opts.recurse limit); if it returns 0, following redirects stops:

redirect_filter: (hdr) => {

if (hdr.location.match(/login/))

return 1;

return 0;

}
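
How the filter interacts with the hop limit can be illustrated with a small simulation. Everything here is hypothetical: `followRedirects` and the `responses` table stand in for real network requests, and the code only models the rules described above rather than reproducing A-Parser's behavior.

```javascript
// Hypothetical simulation: `responses` maps a URL to the headers it would return.
function followRedirects(startUrl, responses, opts = {}) {
    const { recurse = 7, redirect_filter = () => 1 } = opts;
    let url = startUrl;
    let hops = 0;
    while (true) {
        const hdr = responses[url];
        const isRedirect = hdr && hdr.Status >= 300 && hdr.Status < 400 && hdr.location;
        if (!isRedirect) return { url, hops, stopped: 'final' };
        if (hops >= recurse) return { url, hops, stopped: 'limit' };
        if (!redirect_filter(hdr)) return { url, hops, stopped: 'filter' };
        url = hdr.location;
        hops++;
    }
}

const responses = {
    'http://site.test/': { Status: 302, location: 'http://site.test/login' },
    'http://site.test/login': { Status: 302, location: 'http://site.test/promo' },
    'http://site.test/promo': { Status: 200 },
};

const onlyLogin = (hdr) => (hdr.location.match(/login/) ? 1 : 0);

console.log(followRedirects('http://site.test/', responses, { redirect_filter: onlyLogin }));
// { url: 'http://site.test/login', hops: 1, stopped: 'filter' }
console.log(followRedirects('http://site.test/', responses, { recurse: 0 }));
// { url: 'http://site.test/', hops: 0, stopped: 'limit' }
```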

opts.follow_common_rediects

follow_common_rediects: 0 - determines whether to follow standard redirects (e.g. http -> https and/or www.domain.com -> domain.com); if you specify 1, the scraper follows standard redirects without taking the opts.recurse parameter into account

opts.http2

http2: 0 - determines whether to use the HTTP/2 protocol when executing requests; by default, HTTP/1.1 is used

opts.randomize_tls_fingerprint

randomize_tls_fingerprint: 0 - this option allows bypassing site bans based on TLS fingerprint (1 - enabled, 0 - disabled)

opts.tlsOpts

tlsOpts: { ... } - allows passing settings for https connections

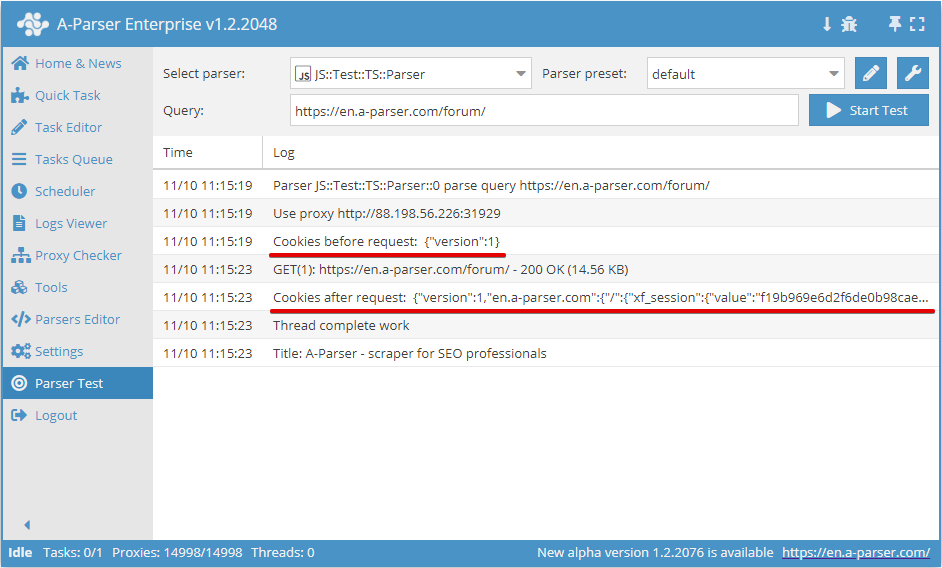

await this.cookies.*

Working with cookies for the current request

.getAll()

Getting the array of cookies

await this.cookies.getAll();

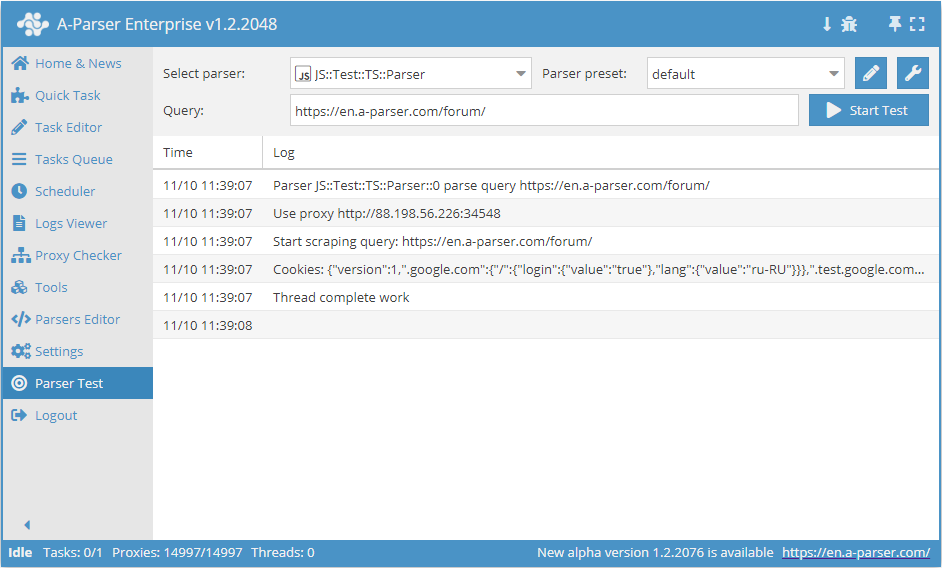

.setAll(cookie_jar)

Setting cookies; a hash with cookies must be passed as an argument

async parse(set, results) {

this.logger.put("Start scraping query: " + set.query);

await this.cookies.setAll({

"version": 1,

".google.com": {

"/": {

"login": {

"value": "true"

},

"lang": {

"value": "ru-RU"

}

}

},

".test.google.com": {

"/": {

"id": {

"value": 155643

}

}

}

});

let cookies = await this.cookies.getAll();

this.logger.put("Cookies: " + JSON.stringify(cookies));

results.SKIP = 1;

return results;

}

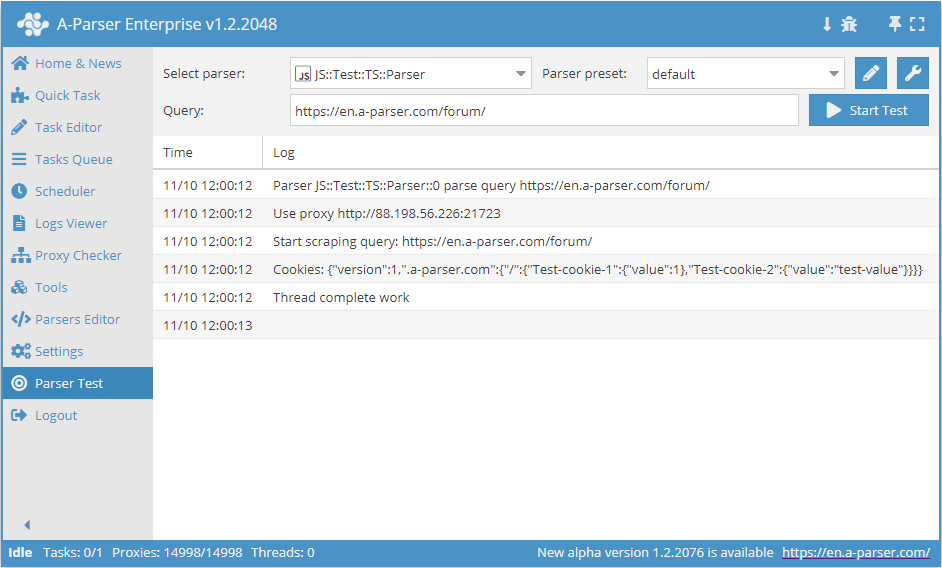

.set(host, path, name, value)

await this.cookies.set(host, path, name, value) - setting a single cookie

async parse(set, results) {

this.logger.put("Start scraping query: " + set.query);

await this.cookies.set('.a-parser.com', '/', 'Test-cookie-1', 1);

await this.cookies.set('.a-parser.com', '/', 'Test-cookie-2', 'test-value');

let cookies = await this.cookies.getAll();

this.logger.put("Cookies: " + JSON.stringify(cookies));

results.SKIP = 1;

return results;

}

await this.proxy.*

Working with proxies

.next()

Change the proxy to the next one; the old proxy will no longer be used for the current request

.ban()

Change and ban the proxy (must be used when the service blocks operation by IP); the proxy will be banned for the duration specified in the scraper settings (proxybannedcleanup)

.get()

Get the current proxy (the last proxy used for the request)

.set(proxy, noChange?)

await this.proxy.set('http://127.0.0.1:8080', true) - set a proxy for the next request. The noChange parameter is optional; if true is set, the proxy will not change between attempts. Default is noChange = false

await this.sessionManager.*

Methods for working with sessions. Each session must store the proxy and cookies used. Arbitrary data can also be saved.

To use sessions in a JS scraper, you must first initialize the Session Manager. This is done by calling the await this.sessionManager.init() method in init()

.init(opts?)

Session Manager initialization. An object (opts) with additional parameters can be passed as an argument (all parameters are optional):

- name - allows overriding the name of the scraper to which the sessions belong; by default it is the name of the scraper where initialization occurs

- waitForSession - tells the scraper to wait for a session until it appears (this is only relevant when multiple jobs are running, e.g., one generates sessions and another uses them), i.e., .get() and .reset() will always wait for a session

- domain - tells the scraper to look for sessions among all those saved for this scraper (if the value is not set), or only for a specific domain (the domain must be specified with a leading dot, e.g., .site.com)

- sessionsKey - allows manually setting the session storage name; if not set, the name is generated automatically from name (or the scraper name if name is not set), the domain, and the proxy checker

- expire - sets the session lifetime in minutes; unlimited by default

Example usage:

async init() {

await this.sessionManager.init({

name: 'JS::test',

expire: 15 * 60

});

}

.get(opts?)

Getting a new session, must be called before making a request (before the first attempt). Returns an object with arbitrary data saved in the session. An object (opts) with additional parameters can be passed as an argument (all parameters are optional):

- waitTimeout - specifies how many minutes to wait for a session to appear; works independently of the waitForSession parameter in .init() (and ignores it); an empty session will be used after the timeout expires

- tag - gets a session with the specified tag; you can use the domain name, for example, to link sessions to the domains they were obtained from

Example usage:

await this.sessionManager.get({

waitTimeout: 10,

tag: 'test session'

})

.reset(opts?)

Clear cookies and get a new session. Must be used if the request with the current session was unsuccessful. Returns an object with arbitrary data saved in the session. An object (opts) with additional parameters can be passed as an argument (all parameters are optional):

- waitTimeout - specifies how many minutes to wait for a session to appear; works independently of the waitForSession parameter in .init() (and ignores it); an empty session will be used after the timeout expires

- tag - gets a session with the specified tag; you can use the domain name, for example, to link sessions to the domains they were obtained from

Example usage:

await this.sessionManager.reset({

waitTimeout: 5,

tag: 'test session'

})

.save(sessionOpts?, saveOpts?)

Saving a successful session with the ability to store arbitrary data in the session. Supports 2 optional arguments:

- sessionOpts - arbitrary data to store in the session; can be a number, string, array, or object

- saveOpts - an object with session saving parameters:

  - multiply - optional parameter, allows multiplying the session; the value must be a number

  - tag - optional parameter, sets a tag for the saved session; you can use the domain name, for example, to link sessions to the domains they were obtained from

Example usage:

await this.sessionManager.save('some data here', {

multiply: 3,

tag: 'test session'

})

.count()

Returns the number of sessions for the current Session Manager

Example usage:

let sesCount = await this.sessionManager.count();

.removeById(sessionId)

Deletes all sessions with the specified id. Returns the number of deleted sessions. The current session id is contained in the this.sessionId variable

Example usage:

const removedCount = await this.sessionManager.removeById(this.sessionId);

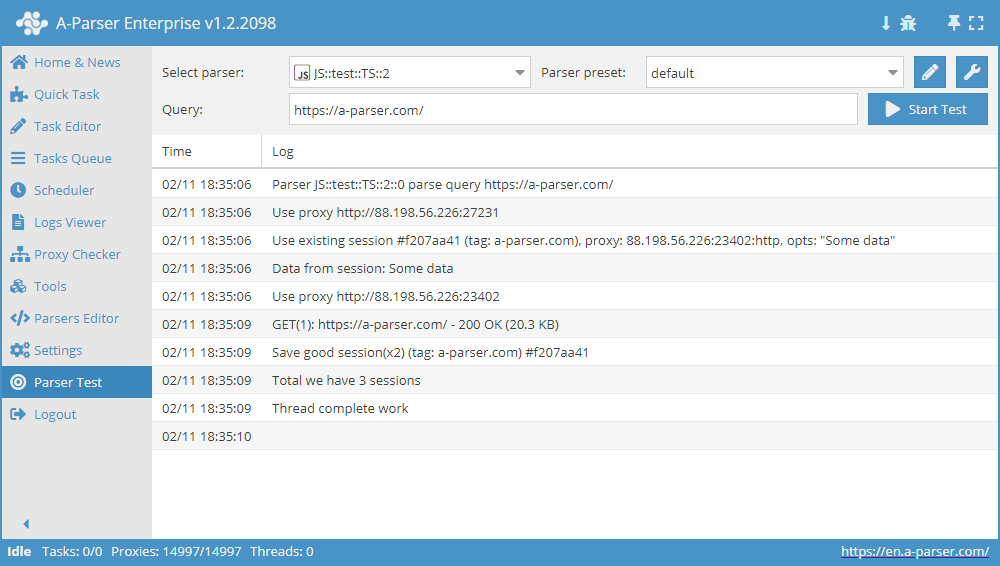

Comprehensive example of using the Session Manager

async init() {

await this.sessionManager.init({

expire: 15 * 60

});

}

async parse(set, results) {

let ses = await this.sessionManager.get();

for(let attempt = 1; attempt <= this.conf.proxyretries; attempt++) {

if(ses)

this.logger.put('Data from session:', ses);

const { success, data } = await this.request('GET', set.query, {}, { attempt });

if(success) {

// process data here

results.success = 1;

break;

} else if(attempt < this.conf.proxyretries) {

const removedCount = await this.sessionManager.removeById(this.sessionId);

this.logger.put(`Removed ${removedCount} bad sessions with id #${this.sessionId}`);

ses = await this.sessionManager.reset();

}

}

if(results.success) {

await this.sessionManager.save('Some data', { multiply: 2 });

this.logger.put(`Total we have ${await this.sessionManager.count()} sessions`);

}

return results;

}

Request Methods await this.request

GET Method

Request parameters can be passed directly in the query string, for example https://a-parser.com/users/?type=staff:

const { success, data, headers } = await this.request('GET', 'https://a-parser.com/users/?type=staff');

Or as an object in queryParams, where key: value is equal to param=value:

const { success, data, headers } = await this.request('GET', 'https://a-parser.com/users/', {

type: 'staff'

});
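
The equivalence between the two forms can be checked with the standard `URL` class. `buildGetUrl` is a hypothetical helper that shows one way an object could be turned into a query string; the exact encoding A-Parser applies is not specified here.

```javascript
// Hypothetical helper: appends an object of GET parameters to a URL.
function buildGetUrl(url, queryParams = {}) {
    const target = new URL(url);
    for (const [key, value] of Object.entries(queryParams)) {
        target.searchParams.append(key, String(value));
    }
    return target.toString();
}

console.log(buildGetUrl('https://a-parser.com/users/', { type: 'staff' }));
// https://a-parser.com/users/?type=staff
```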

POST Method

If the POST method is used, the request body can be passed in two ways:

- List variable names and their values in queryParams, for example:

{

"key": set.query,

"id": 1234,

"type": "text"

}

- List them in opts.body, for example:

body: 'key=' + set.query + '&id=1234&type=text'

If the request body is passed as an object, it is automatically converted to the form-urlencoded format. Also, if body is specified and the content-type header is not, content-type: application/x-www-form-urlencoded is set automatically:

const { success, data, headers } = await this.request('POST', 'https://jsonplaceholder.typicode.com/posts', {

title: 'foo',

body: 'bar',

userId: 1

});
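
To see what a form-urlencoded body looks like for an object, the standard `URLSearchParams` class can be used. `toFormUrlencoded` is a hypothetical helper for illustration; it is an assumption that A-Parser's own conversion yields the same string for every input.

```javascript
// Hypothetical helper: encodes a flat object as application/x-www-form-urlencoded.
function toFormUrlencoded(params) {
    const pairs = Object.entries(params).map(([key, value]) => [key, String(value)]);
    return new URLSearchParams(pairs).toString();
}

console.log(toFormUrlencoded({ title: 'foo', body: 'bar', userId: 1 }));
// title=foo&body=bar&userId=1
console.log(toFormUrlencoded({ key: 'a b&c' }));
// key=a+b%26c
```

The second call shows why passing an object is safer than concatenating a string by hand: reserved characters such as `&` are escaped automatically.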

If the POST request body is a string or a buffer, it is passed as is:

// request with string

const string = 'title=foo&body=bar&userId=1';

const { success, data, headers } = await this.request('POST', 'https://jsonplaceholder.typicode.com/posts', {}, {

body: string

});

// request with buffer

const string = 'title=foo&body=bar&userId=1';

const buf = Buffer.from(string, 'utf8');

const { success, data, headers } = await this.request('POST', 'https://jsonplaceholder.typicode.com/posts', {}, {

body: buf

});

Uploading Files

Sending a file with a POST request using the form-data module:

const fs = require('fs');

const file = fs.readFileSync('pathToFile');

const FormData = require('form-data');

const format = new FormData();

format.append('file', file, 'fileName.ext');

const { success, data, headers } = await this.request('POST', 'https://file.io', {}, {

headers: format.getHeaders(),

body: format.getBuffer()

});

Example of sending a file in a POST request with content type multipart/form-data:

const EOL = '\r\n';

const fs = require('fs');

const file = fs.readFileSync('pathToFile');

const boundary = '----WebKitFormBoundary' + String(Math.random()).slice(2);

const requestHeaders = {

'content-type': 'multipart/form-data; boundary=' + boundary

};

const body = '--'

+ boundary

+ EOL

+ 'Content-Disposition: form-data; name="file"; filename="fileName.ext"'

+ EOL

+ 'Content-Type: text/html'

+ EOL

+ EOL

+ file

+ EOL

+ '--'

+ boundary

+ '--';

const { success, data, headers } = await this.request('POST', 'https://file.io', {}, {

headers: requestHeaders,

body

});
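
Concatenating a Buffer into a string with + converts the file to UTF-8 text, which can corrupt binary files. A possible alternative, sketched below, assembles the multipart body as a Buffer. `buildMultipartBody` is a hypothetical helper, not part of A-Parser; the result would be passed as body together with a matching content-type header, as in the example above.

```javascript
// Hypothetical helper: builds a single-file multipart/form-data body as a Buffer,
// so that binary file contents are preserved byte for byte.
function buildMultipartBody(fieldName, fileName, contentType, fileBuffer, boundary) {
    const EOL = '\r\n';
    const head = Buffer.from(
        '--' + boundary + EOL
        + `Content-Disposition: form-data; name="${fieldName}"; filename="${fileName}"` + EOL
        + `Content-Type: ${contentType}` + EOL
        + EOL,
        'utf8'
    );
    const tail = Buffer.from(EOL + '--' + boundary + '--' + EOL, 'utf8');
    return Buffer.concat([head, fileBuffer, tail]);
}

// Sample bytes that are not valid UTF-8 and would be damaged by string concatenation.
const payload = Buffer.from([0xff, 0x00, 0xfe]);
const boundary = '----WebKitFormBoundary' + String(Math.random()).slice(2);
const multipartBody = buildMultipartBody('file', 'fileName.ext', 'application/octet-stream', payload, boundary);

const requestHeaders = {
    'content-type': 'multipart/form-data; boundary=' + boundary,
};

console.log(multipartBody.includes(payload)); // true
```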