General Information

A-Parser - a scraper for professionals

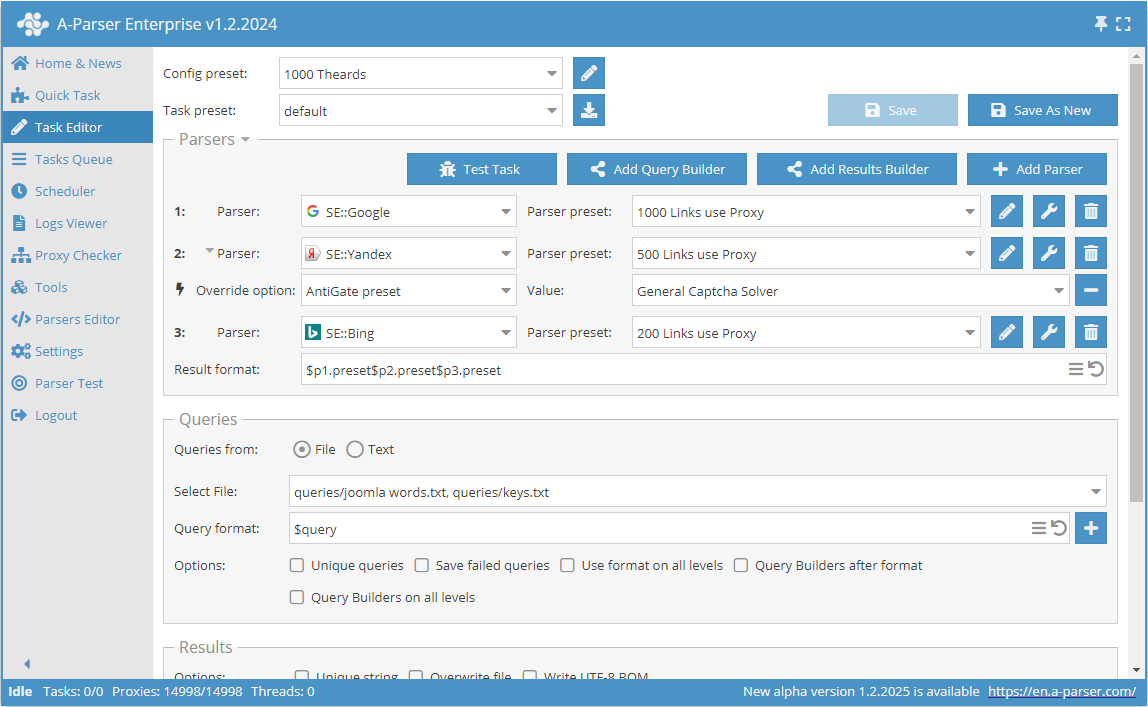

A-Parser is a multi-threaded scraper for search engines, website evaluation services, keywords, content (text, links, arbitrary data) and various other services (YouTube, images, translators, and more). It includes over 90 built-in scrapers.

Key features of A-Parser include support for Windows and Linux, a web interface with remote access, the ability to create your own scrapers without writing code, and the ability to write scrapers with complex logic in JavaScript/TypeScript, including support for NodeJS modules.

Performance, proxy handling, Cloudflare protection bypass, a fast HTTP engine, Chrome control via Puppeteer, scraper management via API and much more make A-Parser a unique solution. In this documentation we will try to cover all of A-Parser's advantages and the ways to use it.

Areas of Use

A-Parser can solve a wide range of tasks. For convenience, we have grouped them into categories by application area; follow the links below for details.

SEO specialists and studios

Business and freelancers

Developers

Marketers

E-commerce and marketplaces

Arbitrage specialists

Features and Advantages

This section briefly lists the main advantages of A-Parser; more detailed information is available at the link below.

Overview of all features

⏩ A-Parser Webinar: Overview and Q&A

Multithreading and Performance

- A-Parser runs on the latest versions of NodeJS and the V8 JavaScript engine

- AsyncHTTPX: a proprietary HTTP engine implementation supporting HTTP/1.1 and HTTP/2, HTTPS/TLS, and HTTP/SOCKS4/SOCKS5 proxies with optional authorization

- The scraper can perform HTTP requests in an almost unlimited number of concurrent threads, depending on the machine's configuration and the task at hand

- Each task (a set of requests) is processed in the specified number of threads

- When a task uses multiple scrapers, requests to the different scrapers are executed simultaneously in separate threads

- The scraper can run several tasks in parallel

- Checking and downloading proxies from sources also occurs in multi-threaded mode
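To make the idea of "a task processed in N threads" concrete, here is a minimal TypeScript sketch. It assumes that a "thread" behaves like a slot holding one in-flight request, and it models those slots as async workers pulling queries from a shared queue. This is a generic illustration, not A-Parser's internal code. The names `runTask`, `threads` and `fakeRequest` are hypothetical.

```typescript
// Hypothetical illustration (not A-Parser's implementation): process a task's
// queries with at most `threads` requests in flight at any moment.
type Handler<T, R> = (item: T) => Promise<R>;

async function runTask<T, R>(
  queries: T[],
  threads: number,
  handler: Handler<T, R>,
): Promise<R[]> {
  const results: R[] = new Array(queries.length);
  let next = 0; // index of the next query to hand out

  // Each worker ("thread") keeps claiming the next unprocessed query until the
  // queue is empty. JS runs one callback at a time, so `next++` is not racy.
  async function worker(): Promise<void> {
    while (next < queries.length) {
      const i = next++;
      results[i] = await handler(queries[i]); // keep results in input order
    }
  }

  // Never start more workers than there are queries (but at least one).
  const workerCount = Math.max(1, Math.min(threads, queries.length));
  await Promise.all(Array.from({ length: workerCount }, () => worker()));
  return results;
}

// Usage: simulate requests with a short delay and record peak concurrency.
const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));
let inFlight = 0;
let peak = 0;

async function fakeRequest(q: string): Promise<string> {
  inFlight++;
  peak = Math.max(peak, inFlight);
  await sleep(10); // stands in for network latency
  inFlight--;
  return `result for ${q}`;
}

runTask(["a", "b", "c", "d", "e"], 2, fakeRequest).then((res) => {
  console.log(res.length, peak); // 5 2
});
```

A pull-based worker pool like this keeps every slot busy until the queue runs out. By contrast, splitting the queries into fixed batches would leave slots idle while the slowest request in each batch finishes.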