General Information

A-Parser - Scraper for Professionals

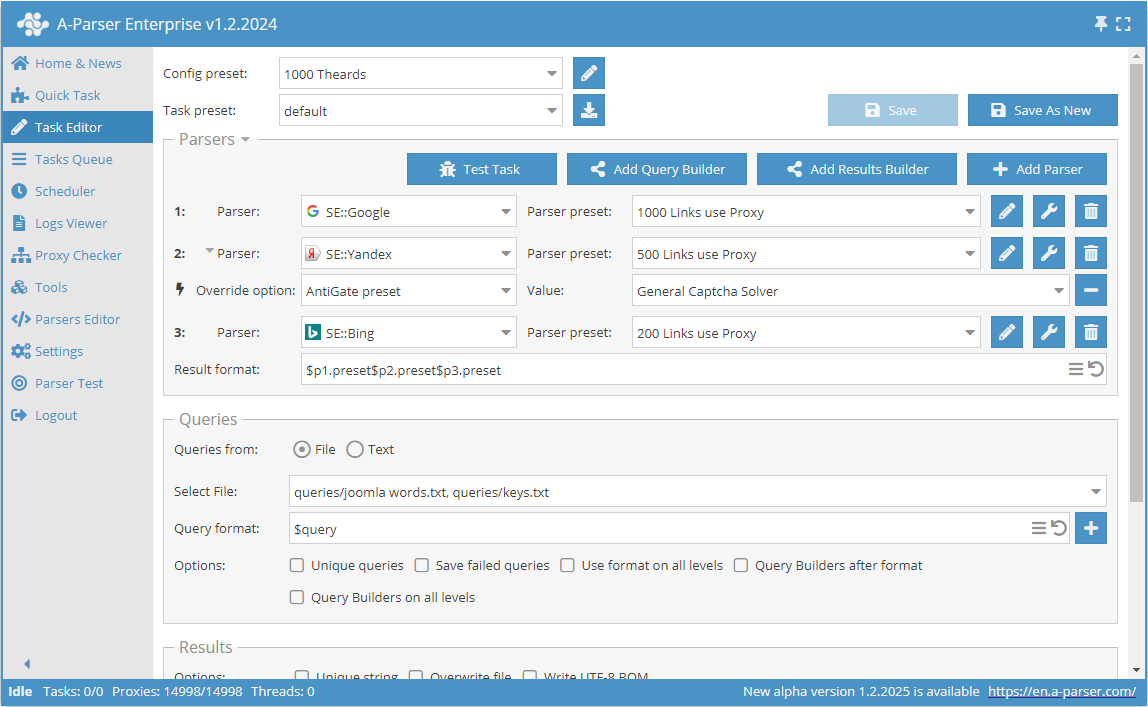

A-Parser is a multithreaded scraper for search engines, website evaluation services, keywords, content (text, links, arbitrary data), and various other services (YouTube, images, translator...). A-Parser contains more than 90 built-in scrapers.

Key features of A-Parser include support for Windows and Linux, a web interface with remote access, the ability to create custom scrapers without writing code, and the ability to create scrapers with complex logic in JavaScript / TypeScript with support for NodeJS modules.

Performance, proxy handling, bypassing CloudFlare protection, a fast HTTP engine, support for controlling Chrome via puppeteer, controlling the scraper via an API, and much more make A-Parser a unique solution. In this documentation, we describe the advantages of A-Parser and the ways to use it.

Areas of Use

A-Parser can solve many tasks. For convenience, we have grouped them by area of application. Follow the links below for details.

Integrations with AI services

SEO specialists and agencies

Business and freelancers

Developers

Marketers

Online stores and marketplaces

Affiliate marketers

Features and Benefits

This section briefly lists the main advantages of A-Parser. More detailed information is available via the link below.

Overview of all features

⏩ A-Parser Webinar: Overview and Q&A

Multithreading and Performance

- A-Parser runs on the latest versions of NodeJS and the V8 JavaScript engine

- AsyncHTTPX - a proprietary HTTP engine supporting HTTP/1.1 and HTTP/2, HTTPS/TLS, and HTTP/SOCKS4/SOCKS5 proxies with optional authentication

- The scraper can execute HTTP requests in a very large number of simultaneous threads; the practical limit depends on the computer's configuration and the task at hand

- Each task (set of requests) is scraped using the specified number of threads

- When a task uses multiple scrapers, the requests to the different scrapers are executed simultaneously in separate threads

- The scraper can run multiple tasks in parallel

- Checking and loading proxies from sources also runs in multithreaded mode
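The idea of processing a set of requests with a fixed number of threads can be illustrated with a generic worker-pool pattern. This is a sketch, not A-Parser's engine: `runWithThreads` and the simulated `fakeRequest` are hypothetical names, and here the "threads" are concurrent async tasks sharing one Node.js event loop rather than OS threads.

```typescript
// Hypothetical sketch: process a list of queries with at most `threads`
// requests in flight at any moment (not A-Parser's actual implementation).
async function runWithThreads<T, R>(
  queries: T[],
  threads: number,
  worker: (query: T) => Promise<R>,
): Promise<R[]> {
  const results: R[] = new Array(queries.length);
  let next = 0; // index of the next query to hand out

  // Each "thread" is an async loop that keeps taking queries until none remain.
  async function thread(): Promise<void> {
    while (next < queries.length) {
      const index = next++;
      results[index] = await worker(queries[index]);
    }
  }

  // Start min(threads, queries.length) loops (at least one) and wait for all.
  const count = Math.max(1, Math.min(threads, queries.length));
  await Promise.all(Array.from({ length: count }, () => thread()));
  return results;
}

// Simulated request: waits briefly, then returns a fake result string.
function fakeRequest(query: string): Promise<string> {
  return new Promise<string>((resolve) => {
    setTimeout(() => resolve(`result for ${query}`), 10);
  });
}

runWithThreads(["a", "b", "c", "d", "e"], 2, fakeRequest).then((results) =>
  console.log(results),
);
```

Results are stored by index, so they come back in query order even though requests finish in arbitrary order.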

Creating Your Own Scrapers

- Ability to create scrapers without writing code

- Use of regular expressions

- Support for multi-page scraping

- Checking content and the presence of the next page

- Replacing the User-Agent, including changing it randomly with every request

- Nested scraping - the ability to substitute the results obtained into new requests

- Full JSON support: parsing and formatting

- Ability to add your own JS functions and use them to process results directly within the scraper
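As a rough illustration of regular-expression extraction combined with a next-page check, here is a generic TypeScript sketch that works on a static HTML string. It does not show how A-Parser's no-code scrapers are configured; the `parsePage` function, the sample markup, and the `rel="next"` convention for the next-page link are assumptions made for this example.

```typescript
// Hypothetical result of parsing one page of search results.
interface PageResult {
  links: string[];
  nextPage: string | null;
}

// Extracts result links with a regular expression and checks for a next-page link.
function parsePage(html: string): PageResult {
  const links: string[] = [];
  // Assumption: result links look like <a class="result" href="...">
  const linkRe = /<a class="result" href="([^"]+)"/g;
  let match: RegExpExecArray | null;
  while ((match = linkRe.exec(html)) !== null) {
    links.push(match[1]);
  }
  // Assumption: the next page is marked with rel="next"
  const next = html.match(/<a rel="next" href="([^"]+)"/);
  return { links, nextPage: next ? next[1] : null };
}

const sample = `
  <a class="result" href="https://example.com/a">A</a>
  <a class="result" href="https://example.org/b">B</a>
  <a rel="next" href="/search?page=2">Next</a>
`;

console.log(parsePage(sample));
```

A multi-page loop would keep requesting `nextPage` until it comes back as `null`.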

Creating Scrapers in JavaScript

- Rich built-in API based on async/await

- Support for TypeScript

- Ability to use any NodeJS module

- Controlling Chrome/Chromium via puppeteer with support for separate proxies for each tab

Powerful Tools for Query and Result Generation

- Query Builder and Results Builder - allow modifying data (search and replace, extracting the domain from a link, transformations using regular expressions, XPath...)

- Query substitutions - from a file, or by iterating over words, characters, and numbers (optionally with a specified step)

- Result filtering - by substring occurrence, equality, and greater-than/less-than comparisons

- Result uniqueness - by string, by domain, or by main domain (A-Parser recognizes all top-level domains, including multi-part ones such as co.uk and msk.ru)

- A powerful result templating engine based on Template Toolkit - allows outputting results in any convenient format (text, CSV, HTML, XML, arbitrary format)

- The scraper uses a system of presets - you can create many predefined settings for different situations for each scraper

- Everything is configurable, with no rigid frameworks or limitations

- Exporting and importing settings makes it easy to share knowledge with other users
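Uniqueness by main domain depends on knowing which suffixes are public (co.uk, msk.ru, and so on), so that `shop.example.co.uk` and `www.example.co.uk` count as the same site. The sketch below shows the idea with a tiny hard-coded suffix list; `SUFFIXES`, `mainDomain`, `hostOf`, and `uniqueByMainDomain` are hypothetical names, the host extraction is deliberately simplified, and a real implementation would typically rely on the full Public Suffix List.

```typescript
// Tiny illustrative subset of public suffixes; a real list has thousands of entries.
const SUFFIXES = new Set<string>(["com", "org", "ru", "uk", "co.uk", "msk.ru"]);

// Returns the "main" domain: the longest known suffix plus one label to its left.
function mainDomain(host: string): string {
  const labels = host.toLowerCase().split(".");
  // Scanning from the left finds the longest matching suffix first.
  for (let i = 0; i < labels.length; i++) {
    if (SUFFIXES.has(labels.slice(i).join("."))) {
      return labels.slice(Math.max(i - 1, 0)).join(".");
    }
  }
  return host.toLowerCase(); // unknown suffix: keep the host as-is
}

// Simplified host extraction: strip the scheme, cut at the first / : ? or #.
function hostOf(url: string): string {
  return url.replace(/^[a-z][a-z0-9+.-]*:\/\//i, "").split(/[\/:?#]/)[0];
}

// Keeps the first URL seen for each main domain, preserving input order.
function uniqueByMainDomain(urls: string[]): string[] {
  const seen = new Set<string>();
  const out: string[] = [];
  for (const url of urls) {
    const key = mainDomain(hostOf(url));
    if (!seen.has(key)) {
      seen.add(key);
      out.push(url);
    }
  }
  return out;
}

const urls = [
  "https://www.example.co.uk/page1",
  "https://shop.example.co.uk/page2",
  "https://site.msk.ru/",
  "https://other.msk.ru/news",
  "https://example.com/",
];
console.log(uniqueByMainDomain(urls));
```

Here the second `example.co.uk` link is dropped, while `site.msk.ru` and `other.msk.ru` are kept as distinct main domains.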