Template Engine Tools (tools)

The Template Toolkit templater has a global variable $tools, which stores a set of tools available in any template and inside JS scrapers.

There is also a variable $tools.error, which contains descriptions of any errors that occur while the tools are running.

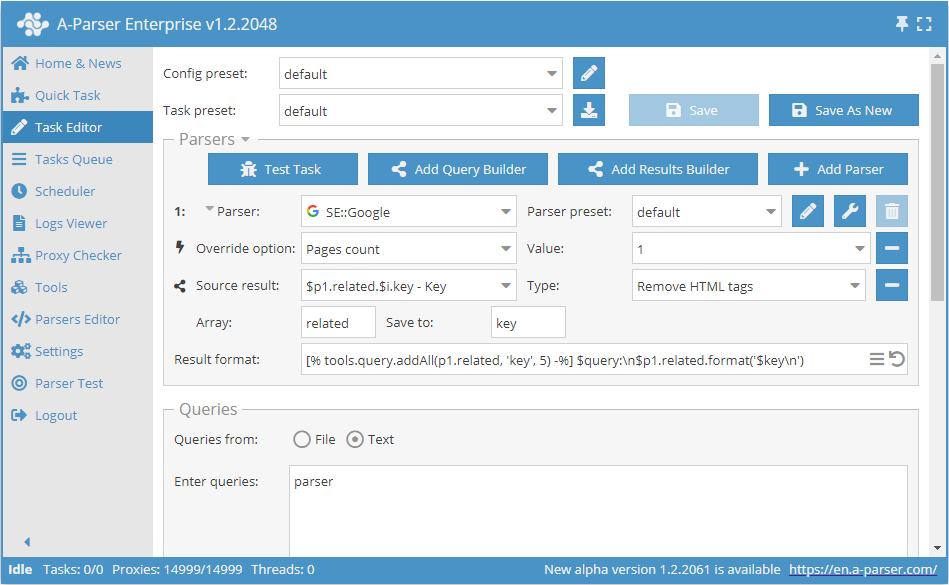

Adding requests $tools.query.*

This tool lets you add new requests to the existing ones while the job is running, building them from results that have already been scraped. It can serve as a substitute for the Parse to level function in scrapers that do not implement it. There are two methods:

[% tools.query.add(query, maxLevel) %] - adds a single request

[% tools.query.addAll(array, item, maxLevel) %] - adds an array of requests

The optional maxLevel parameter sets the level up to which requests are added. If it is omitted, the scraper keeps adding new requests for as long as any are produced. It is also recommended to enable the Unique requests option to avoid loops and redundant work.
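The interplay between maxLevel and the Unique requests option can be illustrated with a small JavaScript model. This is a sketch under stated assumptions, not A-Parser's implementation: the initial request is assumed to be level 0, `expand` is a hypothetical stand-in for whatever results a scraper would turn into new requests, and `runQueue` is an illustrative name.

```javascript
// Conceptual model only, not A-Parser's code: a request queue where each
// processed request may add sub-requests one level deeper.
// Assumption: the initial request is level 0.
function runQueue(seed, expand, { maxLevel = Infinity, unique = true } = {}) {
  const queue = [{ query: seed, lvl: 0 }];
  const seen = new Set([seed]); // plays the role of "Unique requests"
  const processed = [];
  while (queue.length > 0) {
    const { query, lvl } = queue.shift();
    processed.push({ query, lvl });
    // Stop adding sub-requests once the next level would exceed maxLevel.
    if (lvl + 1 > maxLevel) continue;
    for (const next of expand(query)) {
      if (unique && seen.has(next)) continue; // drop requests already queued
      seen.add(next);
      queue.push({ query: next, lvl: lvl + 1 });
    }
  }
  return processed;
}

// Hypothetical suggestion graph containing a cycle (a -> b -> a).
const suggestions = { a: ["b", "c"], b: ["a", "d"], c: ["d"], d: ["e"] };
const expand = (q) => suggestions[q] ?? [];

console.log(runQueue("a", expand, { maxLevel: 1 }).map((r) => r.query)); // [ 'a', 'b', 'c' ]
console.log(runQueue("a", expand).length); // 5: the cycle is cut by the unique set
console.log(runQueue("a", expand, { maxLevel: 2, unique: false }).length); // 6: includes duplicates
```

Running the model with `unique: false` and no `maxLevel` would never terminate on this graph, which is the kind of looping the option is meant to prevent.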

You can also assign an arbitrary level to sub-requests. This is useful for splitting logic across levels, with each level handling a separate task.

Example:

[% tools.query.add({query => query, lvl => 1}) %] - adds a request at a specific level.

Example for JS:

this.query.add({
    query: "some query",
    lvl: 1,
});

Result of the preset in the screenshot:

scraper:
    parser
    what is parsing in programming
    parsing in compiler
    compiler and parser development
    what is syntax analysis
    difference between lexical analysis and syntax analysis
    syntax analyzer
    parser programming language

parser:
    parser definition
    xml parser
    parser generator
    parser swtor
    parser c++
    ffxiv parser
    html parser
    parser java

what is parsing in programming:
    parse wikipedia
    parser compiler
    what is a parser
    parsing programming languages
    definition of parser
    parsing c++
    parser define
    parsing java

html parser:
    online html parser
    html parser php
    html parser java

...

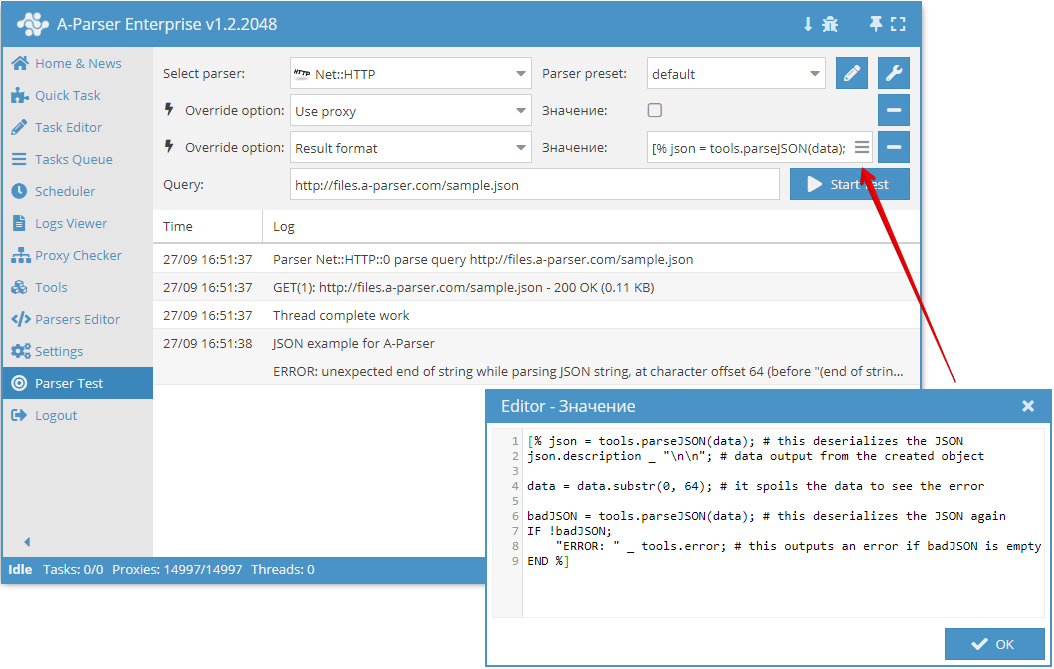

Parsing JSON structures $tools.parseJSON()

This tool deserializes JSON-formatted data into an object whose contents are accessible in the templater. Example usage:

[% tools.parseJSON(data) %]

After deserialization, keys from the resulting object can be accessed like regular variables and arrays.

If the argument is an invalid JSON string, the scraper will record an error in $tools.error.
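For readers more familiar with JavaScript, the behaviour described above resembles `JSON.parse` wrapped in error handling. The sketch below is only an analogue: `parseJSONSafe` is a hypothetical name, and the format of the message stored in `tools.error` is an assumption.

```javascript
// Analogue of tools.parseJSON (not A-Parser's code): parse the string and, on
// failure, record a description in tools.error instead of throwing.
function parseJSONSafe(text, tools) {
  try {
    return JSON.parse(text);
  } catch (e) {
    tools.error = `parseJSON: ${e.message}`; // message format is an assumption
    return undefined;
  }
}

const tools = { error: null };
const data = parseJSONSafe('{"title":"Example","links":["a","b"]}', tools);
console.log(data.title, data.links[1]); // Example b (keys as fields, arrays by index)

parseJSONSafe("{not json", tools);
console.log(tools.error !== null); // true
```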

Output to CSV $tools.CSVline

This tool formats the given values as a CSV line and appends a newline. In the result format it is therefore enough to list the required variables, and the output will be a valid CSV file ready for import into Google Docs, Excel, and similar applications.

Example usage:

[% tools.CSVline(query, p1.serp.0.link, p2.title) %]
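The formatting step can be sketched in JavaScript using the common RFC 4180 quoting rules. This assumes that CSVline uses a comma delimiter, quotes only fields that need it, and ends the line with LF; A-Parser's exact delimiter and quoting policy may differ, and `csvLine` is a hypothetical name.

```javascript
// Sketch of RFC 4180-style CSV line formatting (assumed behaviour, not
// A-Parser's code): comma delimiter, LF line ending.
function csvLine(...values) {
  const fields = values.map((v) => {
    const s = v === undefined || v === null ? "" : String(v);
    // Quote a field if it contains the delimiter, a quote, or a line break;
    // embedded quotes are escaped by doubling them.
    return /[",\r\n]/.test(s) ? `"${s.replace(/"/g, '""')}"` : s;
  });
  return fields.join(",") + "\n";
}

process.stdout.write(csvLine("parser", "https://example.com/?a=1,2", 'Say "hi"'));
// parser,"https://example.com/?a=1,2","Say ""hi"""
```

Quoting only when necessary keeps simple values readable while still producing a file that spreadsheet applications parse correctly.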


Working with SQLite DB $tools.sqlite.*

This tool allows for easy and full-featured work with SQLite databases. There are three methods:

$tools.sqlite.get() - retrieves a single result row from the database with a SELECT query, for example:

[% res = tools.sqlite.get('results/test.sqlite', 'SELECT COUNT(*) AS count FROM test') %]

$tools.sqlite.run() - executes statements that operate on the database (INSERT, DROP, etc.), for example:

[% res = tools.sqlite.run('results/test.sqlite', 'INSERT INTO test VALUES(?)', 'test') %]

$tools.sqlite.all() - retrieves all rows returned by a query, for example all data from a table:

[% res = tools.sqlite.all('results/test.sqlite', 'SELECT * FROM test') %]

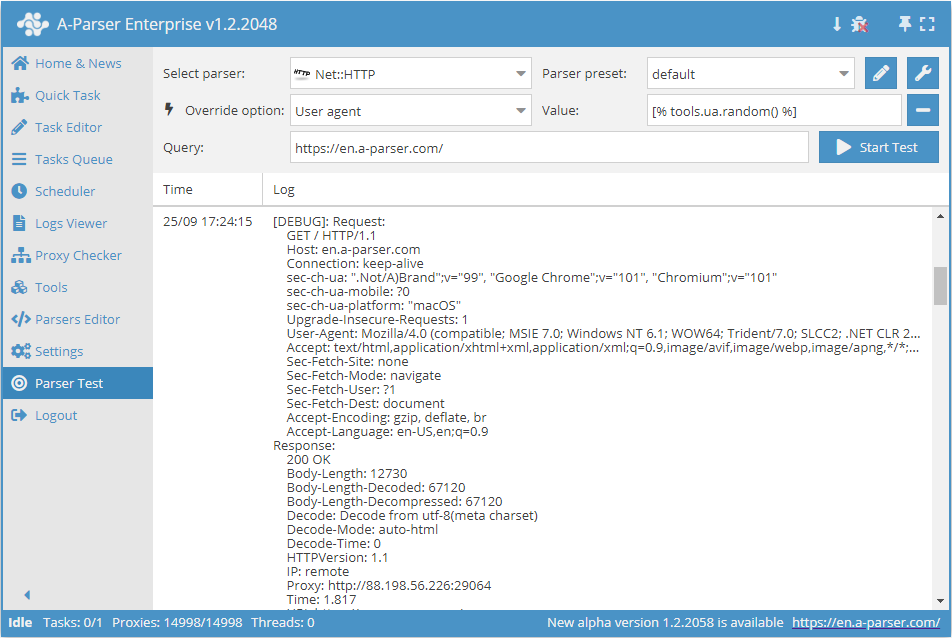

User-agent substitution $tools.ua.*

This tool substitutes the user agent in scrapers that use one (for example, Net::HTTP). There are two methods:


$tools.ua.list() - contains the full list of available user agents.

$tools.ua.random() - returns a random user agent from that list.

The list of all user agents is stored in the file files/tools/user-agents.txt, which can be edited if necessary.

To use this tool in the User agent parameter of a scraper, specify the call explicitly, for example:

[% tools.ua.random() %]
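What ua.list() and ua.random() do conceptually can be sketched in a few lines of JavaScript. This assumes user-agents.txt holds one user agent per line with blank lines ignored; the file format, the function names, and the sample strings are illustrative assumptions, not A-Parser's implementation.

```javascript
// Sketch of a random user-agent pick, assuming one user agent per line
// (blank lines ignored). Not A-Parser's implementation.
function parseUserAgents(text) {
  return text
    .split(/\r?\n/)
    .map((line) => line.trim())
    .filter((line) => line.length > 0);
}

// rng is injectable so the choice can be made deterministic in tests.
function randomUserAgent(list, rng = Math.random) {
  return list[Math.floor(rng() * list.length)];
}

// Sample file contents (hypothetical).
const fileContents = [
  "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
  "",
  "Mozilla/5.0 (X11; Linux x86_64)",
].join("\n");

const list = parseUserAgents(fileContents); // plays the role of ua.list()
console.log(list.length); // 2
console.log(randomUserAgent(list)); // one of the two entries
```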

JS support in tools $tools.js.*

This tool lets you add your own JS functions and call them directly from the templater. Node.js modules are also supported. Functions are added in Tools -> JavaScript Editor.

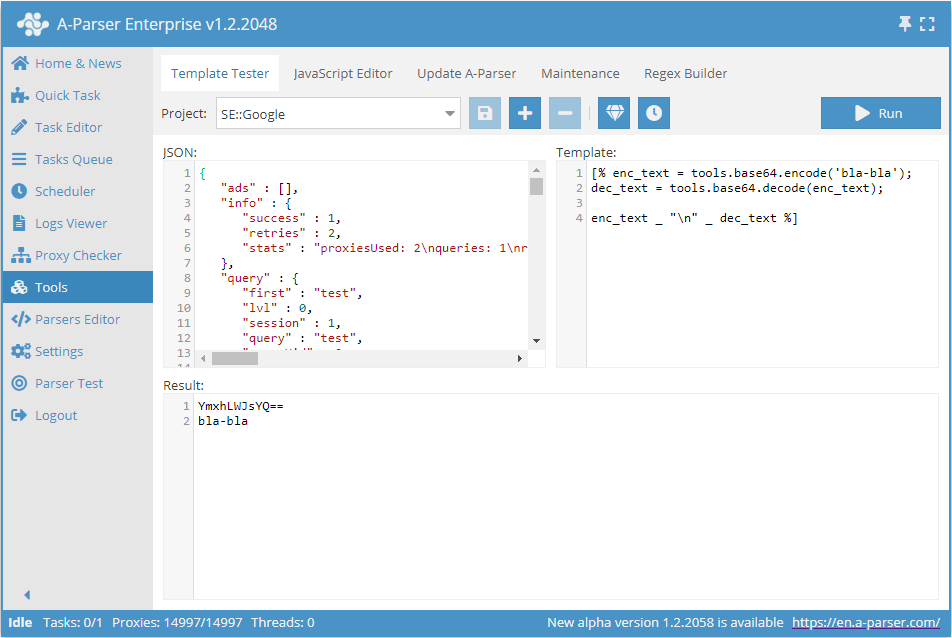

Working with base64 $tools.base64.*

This tool lets you work with base64 directly in the scraper. It has two methods:

$tools.base64.encode() - encodes text to base64

$tools.base64.decode() - decodes a base64 string to text

Example usage:

[% tools.base64.encode(query) %]
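The encode/decode pair can be illustrated with Node.js's built-in Buffer. This sketch assumes the text is handled as UTF-8; how A-Parser treats other encodings is not covered here, and `base64Encode`/`base64Decode` are hypothetical names.

```javascript
// Sketch of base64 encoding/decoding of text, assuming UTF-8.
// Buffer is a Node.js global, so no import is needed.
function base64Encode(text) {
  return Buffer.from(text, "utf8").toString("base64");
}

function base64Decode(b64) {
  return Buffer.from(b64, "base64").toString("utf8");
}

console.log(base64Encode("hello world")); // aGVsbG8gd29ybGQ=
console.log(base64Decode("aGVsbG8gd29ybGQ=")); // hello world
```

Decoding the result of encoding returns the original text, including non-ASCII characters, as long as both sides use the same character encoding.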

Data directory $tools.data.*

This tool is an object that holds a large amount of preinstalled reference data: languages, regions, search engine domains, etc. The complete list of elements (subject to change in the future):

"YandexWordStatRegions", "TopDomains", "CountryCodes", "YahooLocalDomains", "GoogleDomains", "BingTranslatorLangs", "Top1000Words", "GoogleLangs", "GoogleInterfaceLangs", "EnglishMonths", "GoogleTrendsCountries"

Each of these elements is an array or a hash; to inspect its contents, output it, for example, as JSON:

[% tools.data.GoogleDomains.json() %]

In-memory data storage $tools.memory.*

A simple in-memory key/value store shared across all jobs, API requests, etc. Its contents are reset when A-Parser is restarted. There are three methods:

[% tools.memory.set(key, value) %] - stores value under key

[% tools.memory.get(key) %] - returns the value stored under key

[% tools.memory.delete(key) %] - removes the entry for key from memory
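The sharing semantics can be modelled with a single process-wide Map in JavaScript. This is a conceptual sketch, not A-Parser's implementation: the return value for a missing key (`undefined` here) is an assumption, `jobTick` is a hypothetical helper standing in for two jobs, and the model ignores concurrency between threads.

```javascript
// Conceptual model of tools.memory (not A-Parser's code): one process-wide
// Map, so every caller shares entries until the process restarts.
const store = new Map();
const memory = {
  set: (key, value) => { store.set(key, value); },
  get: (key) => store.get(key), // missing keys yield undefined here (assumption)
  delete: (key) => { store.delete(key); },
};

// Two hypothetical "jobs" sharing one counter through the store.
function jobTick(name) {
  const count = (memory.get("counter") ?? 0) + 1;
  memory.set("counter", count);
  return `${name}: ${count}`;
}

console.log(jobTick("job A")); // job A: 1
console.log(jobTick("job B")); // job B: 2
memory.delete("counter");
console.log(memory.get("counter")); // undefined
```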

Getting A-Parser version information $tools.aparser.version()

This tool allows you to get information about the A-Parser version and output it in the result.

Example usage:

[% tools.aparser.version() %]

Getting job ID and thread count $tools.task.*

This tool returns the job ID and the number of threads the job uses. It provides two values:

[% tools.task.id %] - returns the job ID

[% tools.task.threadsCount %] - returns the number of threads used by the job

Stopping a job $tools.task.stop()

This tool allows you to stop the job execution at any moment. It takes a string as an argument, which should contain the reason for stopping the job.

Example usage:

[% IF query.num == 3;
    tools.task.stop('Stop after 3 queries');
END %]