Frequently Asked Questions

1. Questions related to demo, payment and purchase

1.1. How to download results in the Demo version?

In the Demo version, the results of the work are not available for download. We provide them upon your request. Send your queries and tell us which scraper you are interested in, and we will send you the results (their quantity is limited in the demo).

1.2. Do I need to pay extra for anything after purchasing A-Parser?

No. For details, see licenses and add-ons and the purchase page.

1.3. Where and how can I pay for proxies?

When purchasing a license, you receive bonus proxies.

Lite - 20 threads for 2 weeks, Pro and Enterprise - 50 threads for a month.

You can buy more threads or extend existing ones in your Personal Account, on the Store tab, in the Proxies subsection.

1.4. Can you set up a task for me for a fee?

Technical support for issues related to A-Parser operation is free of charge. For paid help with setting up tasks, see: Paid services for task creation, assistance with setup, and training on using A-Parser.

1.5. Can I pay for the scraper via Privat24 bank? Via QIWI?

A list of payment systems we work with is available here: buy A-Parser.

1.6. If I only need to scrape the number of indexed pages on Yandex, which scraper should I buy?

The Lite version is enough for these purposes, but Pro is more practical and flexible.

1.7. Where can I see information about my license?

1.8. Is it possible to use purchased proxies from several IP addresses?

No.

2. Questions about installation, launch, and updates

2.1. I click the Download button, but the archive doesn't download. What should I do?

Check that you have free space on your hard drive and disable your antivirus. Follow the installation instructions, and see also How to start working.

2.2. I bought the Enterprise version, but PRO is still installing. What should I do?

Delete the previous version. In the Members Area, check if your IP address is correctly specified. Before downloading, click the Update button. Download the newer version. More details in the installation instructions.

2.3. I installed the program, but it won't start, what should I do?

Check which applications are running, disable your antivirus, and check how much free RAM is available. Also check in your Personal Account that your IP address is specified correctly. More details: installation instructions.

2.4. What to do if I have a dynamic IP address?

No problem: A-Parser supports dynamic IP addresses. You just need to update your IP in the Members Area every time it changes. To avoid this, we recommend using a static IP address.

2.5. What are the optimal parameters for a server or computer to install the scraper?

All system requirements can be viewed here: system requirements.

2.6. I started a task, the scraper crashed and now won't start. What should I do?

Stop the server, check whether the process is still hanging in memory, and try starting it again. You can also launch A-Parser with all tasks stopped by running it with the -stoptasks parameter. See details on running with parameters.

2.7. What password to enter when opening 127.0.0.1:9091?

On the first launch, the password is empty. After that, it is the password you set. If you have forgotten it, see password reset.

2.8. I enter my IP in the Personal Account, but it doesn't change in the Your current IP field. Why?

The Your current IP field shows the IP address you currently have; it is not supposed to change. This is the address you must enter in the IP 1 field.

2.9. Can I run two copies simultaneously?

You can run two copies on the same machine only if they have different ports specified in the configuration file.

You can run two A-Parsers on different machines simultaneously only if you have purchased an additional IP in your Personal Account.

2.10. Is the scraper tied to hardware?

No. Your IP is used for license control.

2.11. When updating, should I replace only the .exe? What are config/config.db and files/Rank-CMS/apps.json for?

Unless otherwise specified, update only the .exe. The first file stores the A-Parser configuration, and the second is the CMS detection database used by the Rank::CMS scraper.

2.12. I have Win Server 2008 Web Edition - the scraper doesn't start...

A-Parser will not work on this OS version. The only option is to change the OS.

2.13. I have a 4-core processor. Why does A-Parser use only one core?

A-Parser uses from 2 to 4 cores; the additional cores are used only for filtering, the Results Builder, and Parse custom result.

2.14. I started getting a segmentation fault (segmentation failed, segmentation error). What should I do?

Most likely your IP has changed. Check your Personal Account.

2.15. I have Linux. A-Parser started, but it does not open in the browser. How to fix it?

Check your firewall - it's likely blocking access.

2.16. I have Windows 7. A-Parser started, but it does not open in the browser, and there is no Node.js process in the Task Manager. How to fix it?

Check for Windows updates and install the latest ones available. In particular, the Windows 7 SP1 update is required.

2.17. A-Parser does not start and the aparser.log shows the error FATAL: padding_depad failed: Invalid argument provided. at ./Crypt/Mode/CBC.pm line 20.

Most likely one of the tasks in the /config/tasks/ folder is damaged because of a disk error (for example, if the PC lost power without a proper shutdown). You can find out more by launching A-Parser with the -morelogs flag.

Solution: launch A-Parser with the -stoptasks parameter. If that doesn't help, clear the entire /config/tasks/ folder. If the problem still persists, reinstall the scraper in a new directory and copy over the config file from the old one (if it is not damaged).

3. Questions about A-Parser and other general settings

3.1. How to configure the proxy checker?

Detailed instructions are here: proxy settings.

3.2. No live proxies - why?

Check your internet connection and the proxy checker settings. If everything is set up correctly, your proxy list currently contains no working servers: either use other proxies or try again later. If you are using our proxies, check the IP address in your Personal Account, in the Proxies section. Your provider may also be blocking access to third-party DNS servers; in that case, try the steps described here: http://a-parser.com/threads/1240/#post-3582

3.3. How to connect anti-captcha?

Detailed instructions on setting up anti-captcha are here.

3.4. I changed the parameters in the scraper settings, but they were not applied. Why?

The default preset cannot be changed; if any changes are made, you must click Save as new preset, and then use it in your task.

3.5. Can I change the settings of a running task?

Yes, but not all of them. Pause the running task and select Edit.

3.6. How to import a preset?

Click the button next to the task selection field in the Task Editor. Details here.

3.7. How to configure the scraper so that it doesn't use proxies?

In the settings of the desired scraper, uncheck the Use proxy box.

3.8. I don't have the Add override / Override option button!

This option can be added directly in the Task Editor; see Scraper options.

3.9. How to overwrite the same results file?

When creating a task, set the Overwrite file option.

3.10. Where do I change the scraper password?

3.11. I set 6 million keywords for scraping, and also specified that all domains should be unique. How can I ensure that when I set new 6 million keywords, only unique domains that do not overlap with the previous scraping are recorded?

You need to use the option Save uniqueness when creating the first task, and specify the saved database in the second. Details in Additional options of the Task Editor.
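The idea behind Save uniqueness can be modelled outside A-Parser: keep the set of domains seen in the first run on disk and consult it during the second run. The sketch below is only a conceptual model under that assumption; the JSON file and the function name `filter_new_domains` are hypothetical and do not reflect the format of A-Parser's own uniqueness database.

```python
import json
from pathlib import Path


def filter_new_domains(domains, db_path):
    """Return domains not seen in this or any earlier run, and remember them.

    db_path is a hypothetical JSON file standing in for a saved
    uniqueness database; A-Parser stores its own database differently.
    """
    path = Path(db_path)
    seen = set(json.loads(path.read_text(encoding="utf-8"))) if path.exists() else set()
    new = []
    for domain in domains:
        if domain not in seen:  # skips duplicates within this run and from earlier runs
            seen.add(domain)
            new.append(domain)
    path.write_text(json.dumps(sorted(seen)), encoding="utf-8")
    return new
```

Calling it once with the first batch and again with the second batch returns, the second time, only domains that did not appear in the first.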

3.12. How to bypass the 1000 results limit for Google?

Use the option Parse all results.

3.13. How to bypass the 1024 threads limit on Linux?

3.14. What is the thread limit on Windows?

Up to 10000 threads.

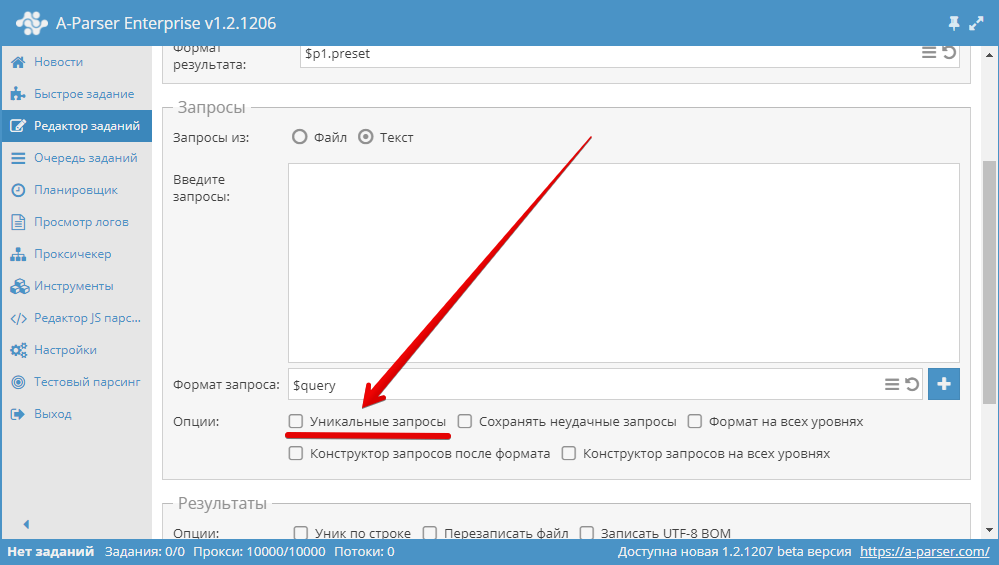

3.15. How to make queries unique?

Use the Unique queries option in the Queries block in the Task Editor.

3.16. How to disable proxy checking?

In Settings - Proxy Checker Settings, select the desired proxy checker and check the Do not check proxy box. Save and select the saved preset.

3.17. What is Proxy ban time? Can I set it to 0?

It is the time, in seconds, for which a proxy is banned. Yes, you can set it to 0.

3.18. What is the difference between Exact Domain and Top Level Domain in the SE::Google::Position scraper?

Exact Domain is a strict match: if the search result shows www.domain.com and we are looking for domain.com, there is no match. Top Level Domain checks the entire top-level domain, so in this case there is a match.
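A minimal sketch of the two matching rules as described above, assuming that Top Level Domain means "the domain itself or any of its subdomains". The function names are hypothetical; this is an illustration, not the code SE::Google::Position actually runs.

```python
from urllib.parse import urlsplit


def exact_domain_match(url, target):
    """Exact Domain: the result's host must equal the target exactly."""
    host = (urlsplit(url).hostname or "").lower()
    return host == target.lower()


def top_level_domain_match(url, target):
    """Top Level Domain (assumed): the target itself or any of its subdomains."""
    host = (urlsplit(url).hostname or "").lower()
    target = target.lower()
    # The leading dot prevents 'notdomain.com' from matching 'domain.com'.
    return host == target or host.endswith("." + target)
```

With the example from the answer, `exact_domain_match("https://www.domain.com/", "domain.com")` is false, while `top_level_domain_match` returns true for the same pair.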

3.19. A test scrape works, but a regular one fails with Some error. Why?

The issue is likely with DNS; try following this DNS configuration instruction.

3.20. Where is the Result Format set?

3.21. The SE::Google scraper is missing Dutch, even though it's available in Google settings. Why?

Dutch is the language, and it is in the list. Details in the improvement about adding Dutch.

4. Questions about scraping and errors during scraping

4.1. What are threads?

All modern processors can execute tasks in multiple threads, which significantly increases the speed of execution. To illustrate, imagine a regular bus that carries a certain number of people in a unit of time - this would be single-threaded processing, and a double-decker bus that carries twice as many people in the same time - this would be multi-threaded processing. A-Parser can handle up to 10000 threads simultaneously.

4.2. A task won't start and says Some Error. Why?

Check the IP address in your Personal Account.

4.3. All queries go to failed, what should I do?

Most likely, the task is incorrectly configured or an invalid query format is used. Also, check if the proxies are live. You can also try increasing the Request retries option (more details here: failed requests).

4.4. How many accounts do I need to register to scrape 1,000,000 keywords from SE::Yandex::Wordstat?

It is impossible to say exactly how many accounts are needed, as an account can stop being valid after an unknown number of requests. However, you can always register new accounts using the SE::Yandex::Register scraper, or simply add existing accounts to the files/SE-Yandex/accounts.txt file.

4.5. A task won't start and says Error: Lock 100 threads failed (20 of limit 100 used). What should I do?

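The message means that the task requested 100 threads, but 20 of the 100 allowed threads are already in use, so only 80 are free. Increase the maximum available number of threads in the scraper settings, or reduce the number of threads in the task settings. Details in Settings.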

4.6. Can I run 2 tasks simultaneously?

Yes, A-Parser supports executing multiple tasks simultaneously. The number of concurrently running tasks is regulated in Settings - General Settings: Maximum active tasks.

4.7. Where is the results file located?

On the Task Queue tab, you can download the results of each task after it finishes. The files themselves are stored in the results folder.

4.8. Can I download the results file if scraping is not finished?

No, you cannot download the results until scraping is finished. However, you can copy the file from the aparser/results folder while the task is stopped or paused.

4.9. Can your scraper scrape 1,000,000 links per query?

Yes, by using the option Parse all results.

4.10. Can I scrape Rank::CMS and Net::Whois without proxies?

Rank::CMS: you can, and even should. Net::Whois: not recommended.

4.11. How to scrape links from Google?

Use the SE::Google scraper.

4.12. Can the scraper follow links?

Yes, the HTML::LinkExtractor scraper can do this using the Parse to level option.

4.13. Google scraping is very slow, what to do?

First, check the task logs: all queries might be failing. If so, find out why they are failing and fix the cause. When scraping with SE::Google, failed attempts in the task logs are often caused by Google showing captchas, which is normal. You can connect Anti-Captcha to solve captchas so that the scraper doesn't have to retry over and over.

There is also an article describing the factors that affect scraping speed and how they affect it: speed and principle of scraper operation.

4.14. Can your scraper scrape links where the text is only in Japanese?

Yes, for this you need to set the required language in the scraper settings and use Japanese keywords.

4.15. Can your scraper scrape links only in the .de or .ru domain zone?

Yes. You need to use the filter for this.
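The filter itself is configured in A-Parser; to illustrate the check it performs, here is a Python sketch that keeps only links whose host ends in one of the given zones. The function `in_zones` is hypothetical and is meant for post-processing a results file, not as A-Parser filter syntax.

```python
from urllib.parse import urlsplit


def in_zones(url, zones=(".de", ".ru")):
    """True if the URL's host ends with one of the given domain zones."""
    host = (urlsplit(url).hostname or "").lower().rstrip(".")
    # str.endswith accepts a tuple, so all zones are checked in one call.
    return host.endswith(tuple(z.lower() for z in zones))
```

For example, `[u for u in links if in_zones(u)]` keeps only the .de and .ru links from a list.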

4.16. How to get each result on a new line in the file?

When formatting the result, use \n. Example:

$serp.format('$link\n')

4.17. How to scrape the top 10 websites from Google?

Here is the preset:

eyJwcmVzZXQiOiJUT1AxMCIsInZhbHVlIjp7InByZXNldCI6IlRPUDEwIiwicGFy
c2VycyI6W1siU0U6Okdvb2dsZSIsImRlZmF1bHQiLHsidHlwZSI6Im92ZXJyaWRl
IiwiaWQiOiJwYWdlY291bnQiLCJ2YWx1ZSI6MX0seyJ0eXBlIjoib3ZlcnJpZGUi
LCJpZCI6ImxpbmtzcGVycGFnZSIsInZhbHVlIjoxMH0seyJ0eXBlIjoib3ZlcnJp
ZGUiLCJpZCI6InVzZXByb3h5IiwidmFsdWUiOmZhbHNlfV1dLCJyZXN1bHRzRm9y
bWF0IjoiJHAxLnByZXNldCIsInJlc3VsdHNTYXZlVG8iOiJmaWxlIiwicmVzdWx0
c0ZpbGVOYW1lIjoiJGRhdGVmaWxlLmZvcm1hdCgpLnR4dCIsImFkZGl0aW9uYWxG
b3JtYXRzIjpbXSwicmVzdWx0c1VuaXF1ZSI6Im5vIiwicXVlcnlGb3JtYXQiOlsi
JHF1ZXJ5Il0sInVuaXF1ZVF1ZXJpZXMiOmZhbHNlLCJzYXZlRmFpbGVkUXVlcmll
cyI6ZmFsc2UsIml0ZXJhdG9yT3B0aW9ucyI6eyJvbkFsbExldmVscyI6ZmFsc2Us
InF1ZXJ5QnVpbGRlcnNBZnRlckl0ZXJhdG9yIjpmYWxzZX0sInJlc3VsdHNPcHRp
b25zIjp7Im92ZXJ3cml0ZSI6ZmFsc2V9LCJkb0xvZyI6Im5vIiwia2VlcFVuaXF1
ZSI6Ik5vIiwibW9yZU9wdGlvbnMiOmZhbHNlLCJyZXN1bHRzUHJlcGVuZCI6IiIs
InJlc3VsdHNBcHBlbmQiOiIiLCJxdWVyeUJ1aWxkZXJzIjpbXSwicmVzdWx0c0J1
aWxkZXJzIjpbXSwiY29uZmlnT3ZlcnJpZGVzIjpbXX19

4.18. I add a task, go to the Task Queue tab - but it's not there! Why?

Either there is an error in the task configuration, or the task has already finished and moved to Completed.

4.19. It says the file is not in utf-8, but I didn't change it, and it already is utf-8, what should I do?

Check again. Also, try changing the encoding anyway, for example, using Notepad++.
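If you prefer a script to Notepad++, the sketch below re-encodes a query file to UTF-8. It assumes the file is currently in cp1251 (a common encoding for Russian-language files saved on Windows); substitute the file's real encoding. The function name is hypothetical.

```python
from pathlib import Path


def convert_to_utf8(path, source_encoding="cp1251"):
    """Re-encode a text file to UTF-8 in place.

    source_encoding is an assumption; use the encoding the file really has.
    Returns False if the file already decodes as UTF-8 and was left alone.
    """
    p = Path(path)
    raw = p.read_bytes()
    try:
        raw.decode("utf-8")
        return False  # already valid UTF-8, nothing to do
    except UnicodeDecodeError:
        pass
    text = raw.decode(source_encoding)
    p.write_bytes(text.encode("utf-8"))  # write bytes to avoid newline translation
    return True
```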

4.20. In the results file, everything is on one line, even though I set a newline in the task - why?

In A-Parser's Additional Settings, select the CRLF (Windows) line break.

If you have already scraped without this option, open the file in a more advanced viewer, such as Notepad++.
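Alternatively, an existing results file can be converted to CRLF line breaks with a short script. This sketch is for text files only (see 4.40 for why binary files must not be converted this way); the function name is hypothetical.

```python
from pathlib import Path


def lf_to_crlf(src, dst):
    """Copy a text results file, converting UNIX (LF) line breaks to CRLF.

    Existing CRLF pairs are normalised to LF first so they are not doubled.
    """
    data = Path(src).read_bytes()
    data = data.replace(b"\r\n", b"\n").replace(b"\n", b"\r\n")
    Path(dst).write_bytes(data)
```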

4.21. How long does it take to check query frequency on Yandex for 1,000 queries?

This indicator highly depends on the task parameters, server characteristics, proxy quality, etc., so it is impossible to give a definite answer.

4.22. How do I configure the scraper so that the result is query-link?

Result Format:

$p1.serp.format('$query: $link\n')

The result will be:

query: link 1

query: link 2

query: link 3

4.23. How do I re-scrape failed queries and where are they stored?

To save failed queries, you should select the corresponding option in the Queries block in the Task Editor. Failed queries are stored in queries\failed. You need to create a new task and specify the file with failed queries as the query file.

4.24. How to get rid of HTML tags when scraping text?

Use the Remove HTML tags option in the Results Builder.

4.25. How to scrape only domains?

Use the Extract Domain option in the Results Builder.
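To post-process an existing list of links outside A-Parser, the same idea can be sketched with Python's standard `urllib.parse`. Whether the Extract Domain option keeps or strips `www.` is not stated here, so this sketch simply keeps the full host name; the function names are hypothetical.

```python
from urllib.parse import urlsplit


def extract_domain(url):
    """Return the host part of a URL, e.g. 'https://www.site.com/a?b=1' -> 'www.site.com'."""
    # urlsplit only recognises the host when there is a scheme or a leading '//'.
    if "://" not in url and not url.startswith("//"):
        url = "//" + url
    return (urlsplit(url).hostname or "").lower()


def unique_domains(urls):
    """Deduplicate extracted domains, keeping first-seen order."""
    return list(dict.fromkeys(d for d in map(extract_domain, urls) if d))
```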

4.26. What is the maximum size of the query file that can be used in the scraper?

The sizes of query and result files are not limited and can reach terabyte values.

4.27. When I enter text in the query field, why does the scraper output Queries length limited to 8192 characters?

Text entered in the query field is limited to 8192 characters. For larger query lists, use a file as the query source.
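A query file is just a UTF-8 text file with one query per line. The sketch below writes such a file and also reports whether the same text would have fit into the query field, assuming the 8192-character limit counts all characters including line breaks. The function name is hypothetical.

```python
from pathlib import Path

QUERY_FIELD_LIMIT = 8192  # characters, per the error message above


def write_query_file(queries, path):
    """Save queries one per line as UTF-8 for use as a query file.

    Returns True if the same text would also have fit into the query field
    (assuming the limit counts every character, line breaks included).
    """
    text = "\n".join(queries) + "\n"
    Path(path).write_bytes(text.encode("utf-8"))  # bytes: no OS newline translation
    return len(text) <= QUERY_FIELD_LIMIT
```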

4.28. What does Pending threads - 3 mean?

This means there are not enough proxies. Reduce the number of threads or increase the number of proxies.

4.29. In the test scrape, it says 596 SOCKS proxy error: Hello read error(Connection reset by peer) (0 KB) and does not scrape, why?

This indicates non-working proxies.

4.30. What is the difference between result language and search country in the Google scraper?

The difference is as follows: the search country is the binding of results to a specific country. For example, if you search for buy windows with a specific country binding, websites offering to buy windows specifically in that country will have priority. And the result language is the language in which the results should be issued.

4.31. A specific website is not being scraped for me. What could be wrong?

Often the website blocks requests because of an outdated user agent. This can be solved by setting a newer user agent or by putting the following code in the User agent parameter:

[% tools.ua.random() %]

4.32. The scraper hangs, crashes. The log contains the line syswrite: No space left on device

A-Parser is running out of hard disk space. Free up more space.

4.33. My scraper has started outputting none in the results (or an obviously incorrect result)

4.34. A window with the notice Failed fetch news constantly appears

4.35. How to output the first n results of a search engine?

4.36. How to track the redirect chain?

4.37. How to check the indexation of a link on the donor website?

There is a separate scraper for this purpose: Check::BackLink.

More details in the discussion.

4.38. The scraper crashes on Linux. The log contains the entry: EV: error in callback (ignoring): syswrite() on closed filehandle at AnyEvent/Handle.pm line...

Most likely, you need to tune the number of threads, as described in the Documentation: Tuning Linux for more threads.

4.39. Where can I see all possible parameters for use via API?

See Getting an API request in the interface.

You can also get the full task config in JSON: take the task code and decode it from base64.
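The decoding step can be sketched in a few lines of Python. It assumes the task code is plain base64-encoded JSON, which is consistent with the TOP10 preset in 4.17: its first characters decode to {"preset":"TOP10",. Whitespace is stripped first so that a code copied as several wrapped lines still decodes. The function name is hypothetical.

```python
import base64
import json


def decode_task_code(code):
    """Decode an A-Parser task/preset code (assumed base64 JSON) into a dict."""
    compact = "".join(code.split())  # drop line breaks and spaces from wrapped codes
    return json.loads(base64.b64decode(compact))
```

For example, `print(json.dumps(decode_task_code(code), indent=2))` prints the config in a readable form.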

4.40. I download images using Net::HTTP, but they are all corrupted for some reason. What should I do?

1) Check the Max body size parameter; you may need to increase it. 2) Check the line break format in A-Parser settings: Additional Settings - Line Break.

For the image not to be corrupted, the UNIX format must be used.
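The reason is that image files are binary data in which the byte 0x0A (LF) can appear anywhere, including in the file header. Assuming the CRLF setting rewrites every LF byte in the saved output as CR LF, the short demonstration below shows how that breaks the fixed 8-byte PNG signature. It only illustrates the effect; the helper names are hypothetical.

```python
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"  # the first 8 bytes of every PNG file


def to_crlf(data: bytes) -> bytes:
    """Mimic a naive LF -> CRLF conversion applied to raw bytes."""
    return data.replace(b"\n", b"\r\n")


def looks_like_png(data: bytes) -> bool:
    """Check the PNG signature at the start of the data."""
    return data.startswith(PNG_SIGNATURE)
```

A file that starts with a valid signature no longer does after `to_crlf`, because the LF bytes inside the signature each gain an extra CR in front of them.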

4.41. How to get admin contact from WHOIS?

This task is easily solved using the Parse custom result function and a regular expression. Details in the discussion.
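To illustrate the regular-expression approach, here is a Python sketch that pulls the Admin Name, Email, and Phone fields out of raw WHOIS text. WHOIS formats vary between registries, so these field names are an assumption, and Parse custom result is configured in A-Parser itself rather than with this Python code.

```python
import re

# "Admin Name/Email/Phone" is common in gTLD WHOIS output but is an assumption here.
# [ \t] instead of \s keeps a match from spilling onto the next line.
ADMIN_FIELD = re.compile(
    r"^[ \t]*Admin[ \t]+(Name|Email|Phone):[ \t]*(\S.*?)[ \t]*$",
    re.IGNORECASE | re.MULTILINE,
)


def admin_contact(whois_text):
    """Collect Admin Name/Email/Phone fields from raw WHOIS text into a dict."""
    return {m.group(1).lower(): m.group(2).strip() for m in ADMIN_FIELD.finditer(whois_text)}
```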

4.42. Regular expression for scraping phone numbers

4.43. Detecting websites without a mobile version

4.44. How to find out the ns-server name?

4.45. How to scrape links to Yandex cache?

4.46. How to scrape links to all pages of a website?

4.47. How to scrape the title from a page?

4.48. How to scrape all websites in a given domain zone?

4.49. How to collect all URLs with parameters?

4.50. How to filter results by several criteria and divide them by these in the report?

4.51. How to simplify the filter construction?

4.52. How to sort by files depending on the result?

4.53. Create new result directory every X number of files (English)

4.54. First steps with WordStat

4.55. Collecting text blocks >1000 characters

4.56. Outputting a certain amount of text from a page

This is also solved using Template Toolkit. More details in the discussion.

4.57. Checking competition and title inclusion in Google

4.58. Filtering by the number of query occurrences in anchor and snippet

4.59. How to get the content of an article in one line?

4.60. How to compare two string dates?

4.61. How to scrape highlighted words from a snippet?

4.62. Example task using multiple scrapers

4.63. How to shuffle lines in the result and how to output a random number of results?

4.64. How to sign the result using MD5?

4.65. How to convert date from Unix timestamp to string representation?

4.66. Parse to level, how to scrape with a limit?

4.67. The scraper crashes on Linux when starting a task. The log has entries like: Can't call method "if_list" on an undefined value at IO/Interface/Simple.pm...

You need to execute the command in the console:

apt-get --reinstall --purge install netbase

4.68. Error Cannot init Parser: Error: Failed to launch the browser process! [0429/082706.472999:ERROR:zygote_host_impl_linux.cc(90)] Running as root without --no-sandbox is not supported...

Do not run A-Parser as root. Instead, as root, create a new user without root privileges (or use an existing one), allow that user to access the A-Parser directory, then log in as that user and run A-Parser from there.

To create a user as root, you can follow this guide.

To give the new user access to the A-Parser directory, log in as root and make that user the owner of the directory with the following command (replace user with the new user's name):

chown -R user:user aparser

4.69. Error Cannot init Parser: Error: Failed to launch the browser process! [0429/102002.619437:FATAL:zygote_host_impl_linux.cc(117)] No usable sandbox! Update your kernel or see...

Run the following command as root:

sysctl -w kernel.unprivileged_userns_clone=1

You do not need to restart A-Parser.

For CentOS 7, the solution is in this thread.

Run the following command as root:

echo "user.max_user_namespaces=15000" >> /etc/sysctl.conf

Then reload the sysctl settings with the command:

sysctl -p

4.70. Error JavaScript execution error(): Error: Failed to launch the browser process! /aparser/dist/nodejs/node_modules/puppeteer/.local-chromium/linux-884014/chrome-linux/chrome: error while loading shared libraries: libatk-1.0.so.0: cannot open shared object file: No such file or directory...

The error occurs because the OS is missing libraries that Chrome needs to run.

The list of necessary libraries for Chrome to work can be found in Chrome headless doesn't launch on UNIX.

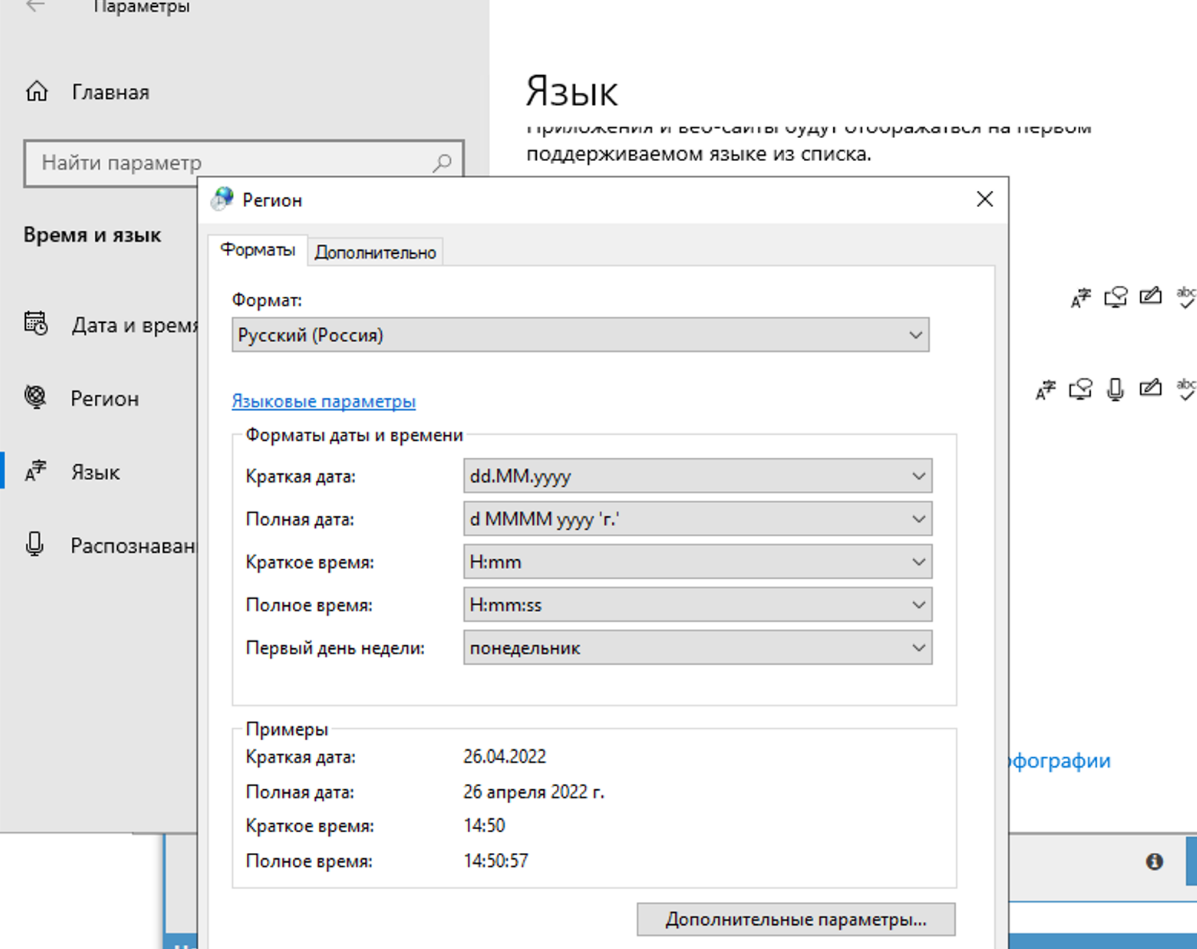

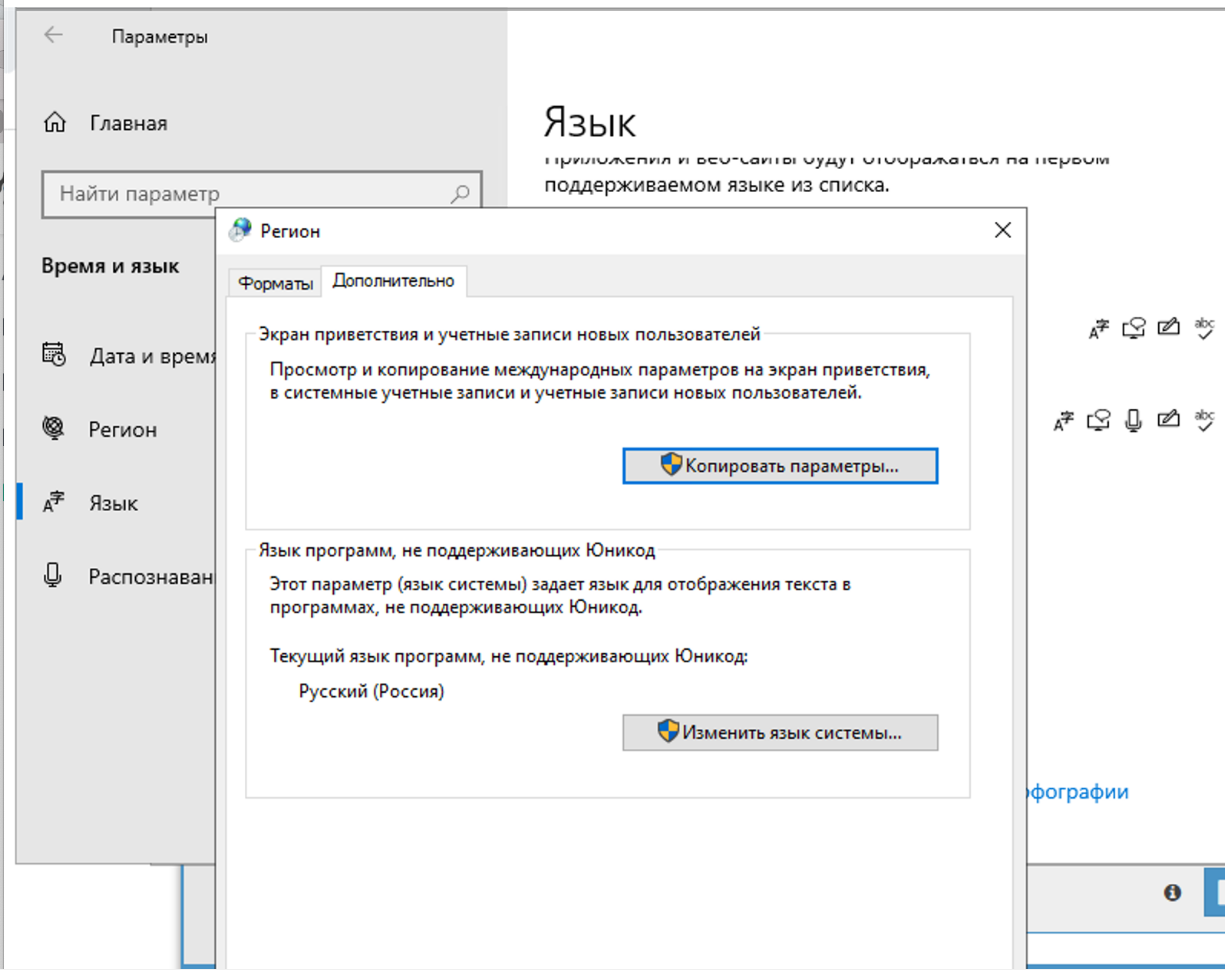

4.71. Why is the captcha not being solved? The log shows that A-Parser received question marks from XEvil instead of the captcha answer

In the region settings, change the language to Russian.

Change it only on the additional tab. This does not affect captcha solving, but if you change it in both places, XEvil itself will have encoding problems.