HTML::LinkExtractor - Scraper for External and Internal Links from a Specified Site

Scraper Overview

HTML::LinkExtractor is a scraper for external and internal links from a specified site. It supports multi-page scraping and traversal of internal pages up to a specified depth, which makes it possible to walk through all pages of a site and collect its internal and external links. It has built-in means of bypassing CloudFlare protection, and it can use Chrome as the engine for scraping pages where data is loaded by scripts. It can reach speeds of up to 2000 queries per minute, which is 120 000 links per hour.

Scraper Use Cases

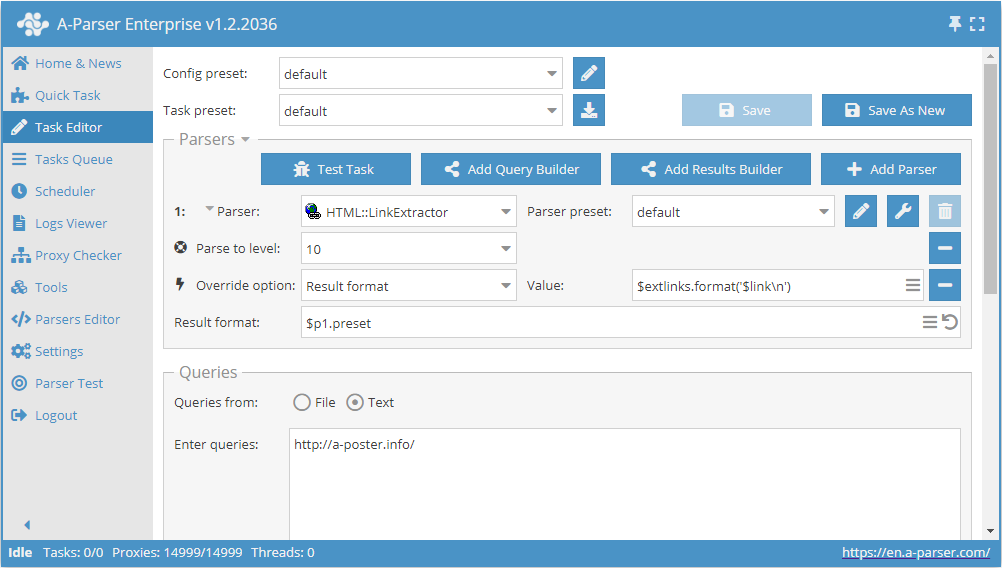

Collecting All External Links from a Site

- Add the option Parse to level and select the value 10 (traversal of neighboring pages up to the 10th level).

- Add the option Result format and specify the value $extlinks.format('$link\n') (output external links).

- In the Queries section, check the Unique queries option.

- In the Results section, check the Unique by string option.

- As the query, specify the link to the site from which external links need to be scraped.
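To make the internal/external distinction concrete, here is a minimal Python sketch of how links on one page could be split by host. It is not A-Parser's implementation: the names `HrefCollector`, `split_links`, and `subdomains_are_internal` are hypothetical, and the de-duplication only approximates what the Unique by string option does.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class HrefCollector(HTMLParser):
    """Collects the href value of every <a> tag, in document order."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def split_links(page_url, html, subdomains_are_internal=False):
    """Return (internal, external) lists of absolute, de-duplicated links."""
    site_host = urlsplit(page_url).hostname or ""
    collector = HrefCollector()
    collector.feed(html)

    internal, external = [], []
    for href in collector.hrefs:
        absolute = urljoin(page_url, href)  # resolve relative links
        parts = urlsplit(absolute)
        if parts.scheme not in ("http", "https"):
            continue  # skip mailto:, javascript:, and similar schemes
        host = parts.hostname or ""
        same_site = host == site_host or (
            subdomains_are_internal and host.endswith("." + site_host)
        )
        bucket = internal if same_site else external
        if absolute not in bucket:  # keep each link string once
            bucket.append(absolute)
    return internal, external


sample = """
<a href="/wiki/">Wiki</a>
<a href="https://t.me/a_parser">Telegram</a>
<a href="https://en.a-parser.com/">English</a>
<a href="/wiki/">Wiki again</a>
<a href="mailto:hi@example.com">Mail</a>
"""
internal, external = split_links("https://a-parser.com/", sample)
print("\n".join(external))
```

In this sketch a subdomain such as en.a-parser.com counts as external unless `subdomains_are_internal` is set, which mirrors the intent of the Subdomains are internal setting.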

Download example

How to import the example into A-Parser

eJxtU01v2zAM/S9CgK5AlrSHXnxLgwZb4dZdm57SHISYztTIoirRWQrD/32U7NjJ

1ptIvsfHL9WCpN/5JwceyItkVQsb3yIRORSy0iTGwkrnwYXwSvxYPqRJkiqzuzuQ

kxtCx4geWwv6tMBstKTQeI6pnM2YIoU9aPbspa4Yc33VnOD34JzK4Ugo0JWSuJa2

hI4iRnAgzeJ+0gK+XYyC+fZmLi5Fs16PRUvxixgODHs96Xrqgy9yD0sMKkrD4F6w

9SjLqJNLghA96lxO6BAyyDxXoTOpW4UwlUH11aiPWKcnp8yW8Ww6BX7hsGQ3QUwS

nJ/HCldiFG3BaarI/9VyREKugrHwXO1Cci15Hyik9hxRBE7yBrJu2Ekt0My0joMe

YDH9baV0zlucFUz62RG/hmT/5Wj6Dk+leGV/HNfQZ4nWbfYwsHJMccuNG+S2tSoV

se3nWJmwmyt27gBsP7bHACvRQS/TZe7U+VAtmHAfw9ZmdnCdtXG2mXPnBk2htll3

c0dkZZb8GzIzx9JqCH2ZSmveiofn4UJmvltDMIYC/yXPo8TZPyJE7e9f2lKtU3yB

N6HAkid5qtql3EitX5/T04gYLoqN30Q2mU7ld4ueFzpRpsCpCESCLfJFcVvNuv+/

/S+vv/zFSd3wwt79U4sO3QUs+3hMnrfBP7b5C6wbebo=


Collecting All Internal Links from a Site

Similar to the first case, but in step 2 specify the value $intlinks.format('$link\n') (output internal links).

Download example

How to import the example into A-Parser

eJxtU8tu2zAQ/BfCQBrAtZNDL7o5Roy2cOI0j5PjA2GtXNYUyZIrN4Ggf++QkiW7

zY27O7OzL9aCZdiHB0+BOIhsXQuX3iITORWy0izGwkkfyMfwWnx9vltm2VKZ/e0b

e7ll64HosbXgd0dgW8fKmoCYymGmFEs6kIbnIHUFzPVVc4I/kPcqpyOhsL6UjFra

EjqKGCnDGuJh0gI+XYyi+fpqLi5Fs9mMRUsJixSODHc96Xrqg0/yQM82qihNg3sB

616WSSeXTDF61Lmc8FvMIPNcxc6kbhXiVAbVF6N+pzoDe2V2wMP0isLC2xJuppQk

Ot+PFa7FKNkCaarE/9FyRMa+orEIqHYhUUveBwqpAyKKyUtsYNUNO6uFNTOt06AH

WEp/UymdY4uzAqRvHfFjyOq/HE3f4akUVvbHo4Y+S7JuVncDK7dLu0PjxqJtrUrF

sMPcVibu5grOPZHrx3YfYaX11Mt0mTt1HKojE+9j2NrMDa6zNs42c+7cWlOo3aq7

uSOyMs/4DSszt6XTFPsyldbYSqDH4UJmoVtDNIYC/yXPk8TZP2Jrdfj+1JbqvMIF

fokFlpjkqWqXciu1fnlcnkbEcFEwfjK7bDqVn50NWOhEmcJORSQy7SwuCm01m/7/

9r+8/vAXZ3WDhf0KDy06dhex8GFMAdvAj23+ApcrebQ=

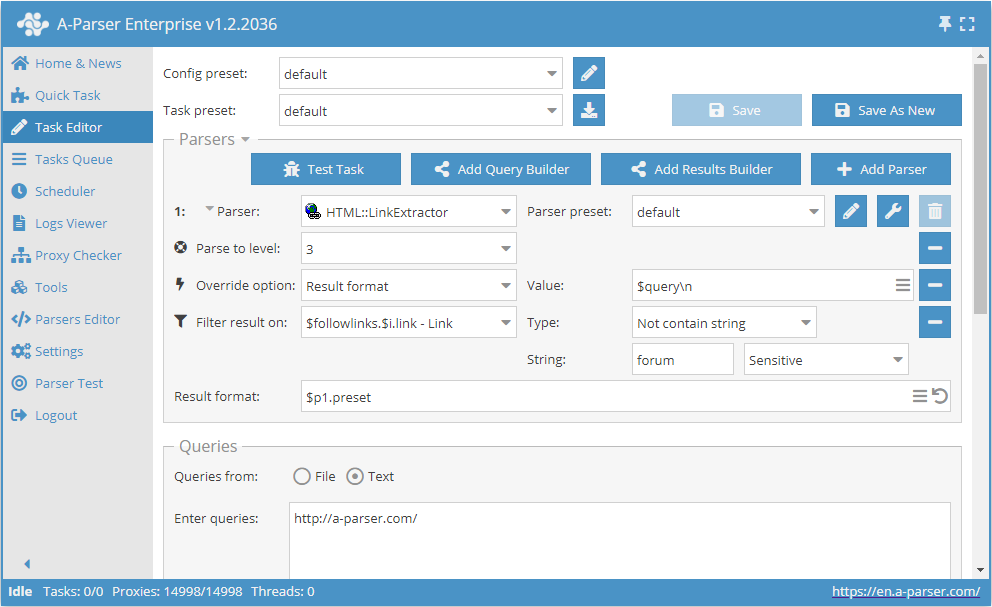

Following Only Links That Do Not Contain the Word "forum"

- Add the option Parse to level and select the value 3 (traversal of neighboring pages up to the 3rd level).

- Add the option Result format and specify the value $query.

- Add a filter: filter by $followlinks.$i.link - Link, select the type Does not contain string, and specify forum.

- In the Queries section, check the Unique queries option.

- In the Results section, check the Unique by string option.

- As the query, specify the link to the site from which links need to be scraped.
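The combination of a depth limit and a follow filter can be sketched as a breadth-first traversal. The Python below is an illustration only, assuming that each level is one hop from the starting page; `crawl`, `links_of`, `should_follow`, and the `SITE` map are hypothetical names, and the dictionary stands in for real HTTP requests.

```python
from collections import deque


def crawl(start, links_of, max_level, should_follow):
    """Breadth-first traversal of pages up to max_level hops from start.

    links_of(url) returns the internal links found on a page.
    should_follow(url) decides whether a discovered link may be queued.
    """
    seen = {start}
    visited = []
    queue = deque([(start, 0)])
    while queue:
        url, level = queue.popleft()
        visited.append(url)
        if level >= max_level:
            continue  # depth limit reached: do not expand this page
        for link in links_of(url):
            if link in seen or not should_follow(link):
                continue
            seen.add(link)
            queue.append((link, level + 1))
    return visited


# Hypothetical site map standing in for real HTTP requests.
SITE = {
    "https://example.com/": [
        "https://example.com/docs/",
        "https://example.com/forum/",
    ],
    "https://example.com/docs/": ["https://example.com/docs/install/"],
    "https://example.com/forum/": ["https://example.com/forum/topic-1/"],
    "https://example.com/docs/install/": [
        "https://example.com/docs/install/linux/",
    ],
}

pages = crawl(
    "https://example.com/",
    links_of=lambda url: SITE.get(url, []),
    max_level=2,
    should_follow=lambda url: "forum" not in url,
)
print("\n".join(pages))
```

Because the forum link is rejected before it is queued, nothing beneath it is ever visited, which is the effect the filter in the steps above is meant to achieve.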

Download example

How to import the example into A-Parser

eJxtVE1v2zAM/S/CDhuQJS2GXXxLgwbd4DZdm57SHISYzrTIkipRaQvD/33UR2xn

6ykh+R75+CG3DLk7uHsLDtCxYtMyE/+zglVQcy+RTZjh1oEN4Q27Wd+WRVEKdbh+

Q8t3qC0hemzL8N0AsbVBoZWjmKjIjClKOIIkz5FLT5hv3Qh+BGtFBSd8rW3DkaQk

BZnBPr14sO/Pz4qNuLWQCEFFhhcbokupXyWpDArCL9tOMnCdWErjTivkQo3yU1nf

kJ3Uk8MB9dBtt6fkbhmFBSnmcppn1Qcf+RHWOkmCwb0k6443sYGKI4ToNHX4+csU

30IGXlUi1OQyVQjTHqo+KfESBTq0Qu0JHwYhwC2tbsiNEJPE6ZwUbvK0Quc+8n8l

DivQepgwR2qXnLRUfaDm0lFE0Jg4bXaVl1i0TKu5lHGBAyymv/JCVnQd85pIPzLx

Y8jqvxxd3+G4FN3CqyUNfZZoXa1uB1alS72PW4z7bQSS7Rbaq7CbC3IeAEw/trsA

a7SFvkzOnKvTAzCgwuENW5ubwXXWxtlmzp10UbXYr/Ixn5BeremVrdRCN0ZC6Et5

KWkrDh6GC5m7vIZgDAL/JS9iibP3iVpL9/MxSTVW0AV+DwIbmuS4ak6541I+PZTj

CBsuiozfiKaYzfjX9PCnO93MWOAh7DUdFHXVbfvPQv/xaD/8OBRtR/v64+4TOjQX

sOSjKbn4yi67v8azl7c=


Collected Data

- Count of external links

- Count of internal links

- External links:

  - links themselves

  - anchors

  - anchors cleaned of HTML tags

  - nofollow parameter

  - the complete <a> tag

- Internal links:

  - links themselves

  - anchors

  - anchors cleaned of HTML tags

  - nofollow parameter

  - the complete <a> tag

- Array with all collected pages (used when the Use Pages option is enabled)
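As an illustration of the per-link fields listed above, the following Python sketch builds one record per `<a>` element. It is a simplification that assumes well-formed, non-nested anchors and quoted attributes; the `LinkRecord` field names are hypothetical and do not claim to match A-Parser's variable names.

```python
import re
from dataclasses import dataclass

# Regex-based parsing is a deliberate simplification for this sketch.
A_TAG = re.compile(r"<a\b[^>]*>(.*?)</a>", re.IGNORECASE | re.DOTALL)
HREF = re.compile(r'\bhref\s*=\s*["\']([^"\']*)["\']', re.IGNORECASE)
REL = re.compile(r'\brel\s*=\s*["\']([^"\']*)["\']', re.IGNORECASE)
ANY_TAG = re.compile(r"<[^>]+>")


@dataclass
class LinkRecord:
    link: str          # value of the href attribute
    anchor: str        # inner HTML of the <a> element
    anchor_clean: str  # anchor with HTML tags stripped
    nofollow: bool     # True when rel contains "nofollow"
    tag: str           # the complete <a>...</a> markup


def extract_records(html):
    records = []
    for match in A_TAG.finditer(html):
        tag = match.group(0)
        opening = tag[: tag.index(">") + 1]  # the opening <a ...> part
        href = HREF.search(opening)
        if not href:
            continue  # anchors without href are not links
        rel = REL.search(opening)
        anchor = match.group(1)
        records.append(LinkRecord(
            link=href.group(1),
            anchor=anchor,
            anchor_clean=ANY_TAG.sub("", anchor).strip(),
            nofollow=bool(rel) and "nofollow" in rel.group(1).lower().split(),
            tag=tag,
        ))
    return records


page = (
    '<p><a href="https://t.me/a_parser" rel="nofollow noopener">'
    "<b>Telegram</b> channel</a> and "
    '<a href="/wiki/">Wiki</a></p>'
)
for record in extract_records(page):
    print(record.link, "|", record.anchor_clean, "|", record.nofollow)
```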

Capabilities

- Multi-page parsing (page navigation)

- Traversing internal pages of the site up to a specified depth (Parse to level option) – allows going through all pages of the site, collecting internal and external links

- Limit on page traversals (Follow links limit option)

- Automatically cleans anchors from HTML tags

- Determines nofollow for each link

- Ability to specify subdomains to be treated as internal site pages

- Supports gzip/deflate/brotli compression

- Determination and conversion of site encodings to UTF-8

- CloudFlare protection bypass

- Engine selection (HTTP or Chrome)

Use Cases

- Obtaining a full site map (saving all internal links)

- Obtaining all external links from a site

- Checking for a backlink to one's own site

Queries

As queries, specify links to the pages from which links need to be collected, or an entry point (e.g., the site's main page) when the Parse to level option is used:

https://lenta.ru/

https://a-parser.com/wiki/index/

Output Results Examples

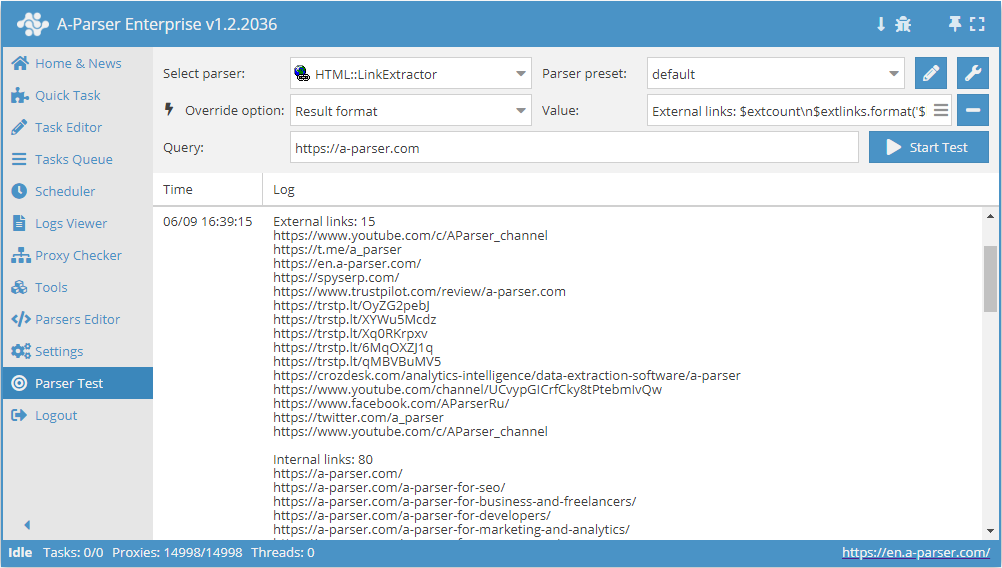

A-Parser supports flexible result formatting thanks to the built-in Template Toolkit templating engine, which allows it to output results in an arbitrary form as well as in a structured form such as CSV or JSON.

Output of External and Internal Links with their Count

Result format:

External links: $extcount\n$extlinks.format('$link\n')

Internal links: $intcount\n$intlinks.format('$link\n')

Result example:

External links: 12

https://www.youtube.com/c/AParser_channel

https://t.me/a_parser

https://en.a-parser.com/

https://spyserp.com/ru/

https://sitechecker.pro/

https://arsenkin.ru/tools/

https://spyserp.com/

http://www.promkaskad.ru/

https://www.youtube.com/channel/UCvypGICrfCky8tPtebmIvQw

https://www.facebook.com/AParserRu

https://twitter.com/a_parser

https://www.youtube.com/c/AParser_channel

Internal links: 129

https://a-parser.com/

https://a-parser.com/

https://a-parser.com/a-parser-for-seo/

https://a-parser.com/a-parser-for-business-and-freelancers/

https://a-parser.com/a-parser-for-developers/

https://a-parser.com/a-parser-for-marketing-and-analytics/

https://a-parser.com/a-parser-for-e-commerce/

https://a-parser.com/a-parser-for-cpa/

https://a-parser.com/wiki/features-and-benefits/

https://a-parser.com/wiki/parsers/
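For readers more familiar with general-purpose code than with Template Toolkit, the layout produced by the result format above can be approximated in Python. This is only a sketch of the text layout: `render` is a hypothetical helper, and the exact newline handling between the two template lines in A-Parser is an assumption.

```python
def render(extlinks, intlinks):
    """Build a count line followed by one link per line, for each group."""
    lines = [f"External links: {len(extlinks)}"]
    lines += extlinks
    lines.append(f"Internal links: {len(intlinks)}")
    lines += intlinks
    return "\n".join(lines) + "\n"


print(render(
    ["https://t.me/a_parser", "https://en.a-parser.com/"],
    ["https://a-parser.com/"],
), end="")
```

Note that the counts are simply the lengths of the lists, so a link that appears twice on a page is counted twice, as in the result example where one YouTube link is listed at both the start and the end of the external links.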

Possible Settings

| Parameter Name | Default Value | Description |
|---|---|---|
| Good status | All | Selects which server responses are considered successful. If the scraper receives a different response from the server, the query is repeated with a different proxy |
| Good code RegEx | | Ability to specify a regular expression to check the response code |
| Ban Proxy Code RegEx | | Ability to ban proxies temporarily (Proxy ban time) based on the server response code |
| Method | GET | Request method |
| POST body | | Content to transmit to the server when using the POST method. Supports the variables $query (request URL), $query.orig (original query), and $pagenum (page number when using the Use Pages option) |
| Cookies | | Ability to specify cookies for the request |
| User agent | _Automatically substitutes the user-agent of the current Chrome version_ | User-Agent header for page requests |
| Additional headers | | Ability to specify arbitrary request headers with support for templating capabilities and using variables from the query constructor |
| Read only headers | ☐ | Read only headers. In some cases, allows saving traffic if processing content is not necessary |
| Detect charset on content | ☐ | Recognize encoding based on page content |
| Emulate browser headers | ☐ | Emulate browser headers |
| Max redirects count | 0 | Maximum number of redirects the scraper will follow |
| Follow common redirects | ☑ | Allows http <-> https and www.domain <-> domain redirects within the same domain, bypassing the Max redirects count limit |
| Max cookies count | 16 | Maximum number of cookies to save |
| Engine | HTTP (Fast, JavaScript Disabled) | Allows selecting the HTTP (faster, no JavaScript) or Chrome (slower, JavaScript enabled) engine |
| Chrome Headless | ☐ | If enabled, the browser will not be displayed |
| Chrome DevTools | ☑ | Allows using tools for Chromium debugging |
| Chrome Log Proxy connections | ☑ | If enabled, information on Chrome connections will be output to the log |
| Chrome Wait Until | networkidle2 | Determines when a page is considered loaded |
| Use HTTP/2 transport | ☐ | Determines whether to use HTTP/2 instead of HTTP/1.1. For example, Google and Majestic immediately ban if HTTP/1.1 is used |
| Don't verify TLS certs | ☐ | Disable TLS certificate validation |
| Randomize TLS Fingerprint | ☐ | This option allows bypassing site bans based on TLS fingerprint |
| Bypass CloudFlare | ☑ | Automatic bypass of the CloudFlare check |
| Bypass CloudFlare with Chrome (Experimental) | ☐ | CloudFlare bypass using Chrome |
| Bypass CloudFlare with Chrome Max Pages | 20 | Maximum number of pages when bypassing CloudFlare via Chrome |
| Subdomains are internal | ☐ | Whether to consider subdomains as internal links |
| Follow links | Internal only | Which links to follow |
| Follow links limit | 0 | Follow links limit, applied to each unique domain |
| Skip comment blocks | ☐ | Whether to skip comment blocks |
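The Follow common redirects setting describes a rule that can be sketched in a few lines of Python. The code below is one possible reading of that description, not A-Parser's actual logic: it treats a hop as "common" only when the scheme or a leading www. changes while the path and query stay the same. The function names are hypothetical.

```python
from urllib.parse import urlsplit


def strip_www(host):
    """Drop a leading 'www.' so www.domain and domain compare equal."""
    return host[4:] if host.startswith("www.") else host


def is_common_redirect(source, target):
    """True for http<->https and www<->bare-domain hops to the same resource."""
    src, dst = urlsplit(source), urlsplit(target)
    schemes_ok = {src.scheme, dst.scheme} <= {"http", "https"}
    same_domain = bool(src.hostname) and (
        strip_www(src.hostname or "") == strip_www(dst.hostname or "")
    )
    same_resource = (src.path or "/", src.query) == (dst.path or "/", dst.query)
    changed = (src.scheme, src.hostname) != (dst.scheme, dst.hostname)
    return schemes_ok and same_domain and same_resource and changed


def redirects_charged(chain):
    """Count the hops in a redirect chain that would use up the redirect limit."""
    return sum(
        1 for a, b in zip(chain, chain[1:]) if not is_common_redirect(a, b)
    )


chain = [
    "http://example.com/",
    "https://example.com/",
    "https://www.example.com/",
    "https://www.example.com/en/",
]
print(redirects_charged(chain))
```

Under this reading, only the last hop in the chain (a path change) would count against Max redirects count; the scheme upgrade and the www. hop would not.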