HTML::ArticleExtractor - Article Scraper

Overview of the scraper

HTML::ArticleExtractor collects articles from web pages.

It operates using the @mozilla/readability module, which is built into A-Parser, and collects basic data such as the title, the content with and without HTML markup, and the article length.

It is based on the Net::HTTP scraper, so it supports that scraper's functionality. It supports multi-page parsing (page navigation). It has built-in means of bypassing CloudFlare protection, and it can use Chrome as the engine for scraping articles from pages where data is loaded by scripts.

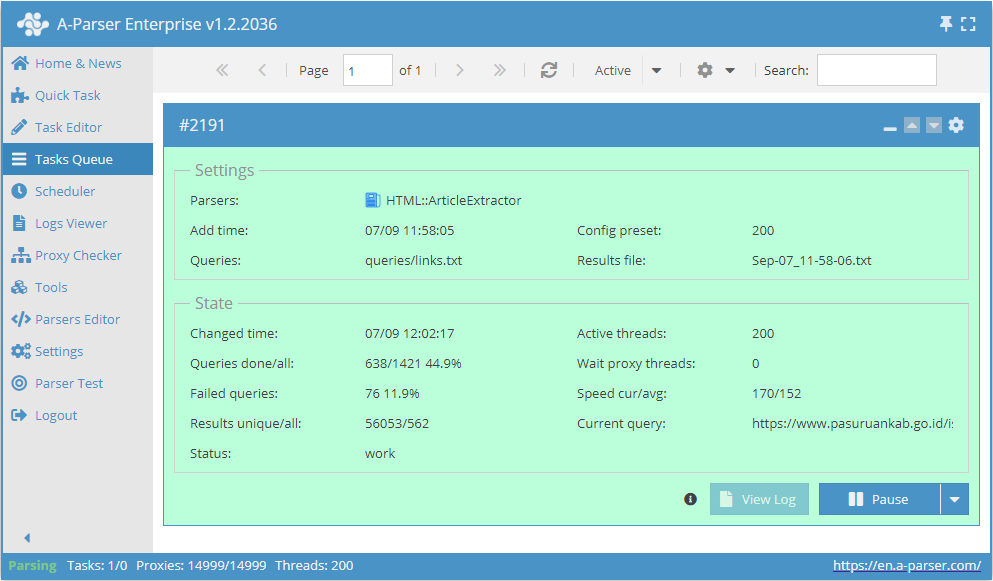

It can reach speeds of up to 200 requests per minute, which is 12,000 links per hour.

Collected data

- Article title - $title

- HTML string of the processed article content - $content

- Textual content of the article (all HTML removed) - $textContent

- Article length in characters - $length

- Article description or short excerpt of the content - $excerpt

- Author metadata - $byline

- Site name - $siteName
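
To make the relationship between the collected variables concrete, here is a minimal TypeScript sketch. The `ArticleRecord` type, `stripTags` and `buildRecord` are hypothetical names used only for illustration and are not part of A-Parser or @mozilla/readability. The sketch assumes that the text content is the HTML content with tags removed and that the length is the character count of that text; the regex-based tag stripping is a deliberately naive stand-in for real DOM-based extraction.

```typescript
// Hypothetical record type mirroring the variables listed above.
interface ArticleRecord {
  title: string;       // $title
  content: string;     // $content: processed article HTML
  textContent: string; // $textContent: content with all HTML removed
  length: number;      // $length: article length in characters
  excerpt: string;     // $excerpt
  byline: string;      // $byline
  siteName: string;    // $siteName
}

// Naive tag stripper for illustration only; a real extractor works on a DOM.
function stripTags(html: string): string {
  return html.replace(/<[^>]*>/g, "").replace(/\s+/g, " ").trim();
}

// Assumption: length is counted over the text without markup.
function buildRecord(
  meta: { title: string; excerpt: string; byline: string; siteName: string },
  content: string,
): ArticleRecord {
  const textContent = stripTags(content);
  return { ...meta, content, textContent, length: textContent.length };
}

const record = buildRecord(
  { title: "Example", excerpt: "Short excerpt", byline: "Jane Doe", siteName: "Example Site" },
  "<div><p>Hello <b>world</b>.</p></div>",
);
console.log(record.textContent, record.length);
```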

Capabilities

- Multi-page parsing (page navigation)

- Supports gzip/deflate/brotli compression

- Detection and conversion of site encodings to UTF-8

- CloudFlare bypass

- Selection of the engine (HTTP or Chrome)

- Ability to set article length

- Scraping of articles with and without HTML tags

Use cases

- Collecting ready-made articles from any site

Queries

For queries, you must specify links to the pages from which articles need to be scraped, for example:

https://a-parser.com/docs/

https://lenta.ru/articles/2021/09/11/buran/

https://www.thetimes.co.uk/article/the-russian-banker-the-royal-fixers-and-a-500-000-riddle-vvgc55b2s
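
Since each query must be a page link, it can help to clean a query list before submitting it. The following is a minimal sketch; `toQueries` is a hypothetical helper, not an A-Parser function, and it assumes that only absolute http and https URLs are useful as queries.

```typescript
// Hypothetical helper: keeps only lines that parse as absolute http(s) URLs.
function toQueries(lines: string[]): string[] {
  const queries: string[] = [];
  for (const line of lines) {
    const candidate = line.trim();
    if (candidate === "") continue; // skip blank lines
    try {
      const url = new URL(candidate);
      if (url.protocol === "http:" || url.protocol === "https:") {
        queries.push(candidate);
      }
    } catch {
      // Not a valid absolute URL; skip it.
    }
  }
  return queries;
}

const queries = toQueries([
  "https://a-parser.com/docs/",
  "   ",
  "not a url",
  "ftp://example.com/file",
]);
console.log(queries);
```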

Output results examples

A-Parser supports flexible result formatting thanks to the built-in Template Toolkit templating engine, which allows it to output results in an arbitrary form or in a structured format such as CSV or JSON.
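
To illustrate what the structured formats look like, the sketch below serializes a few result fields as CSV and as JSON. It is written in TypeScript and is not Template Toolkit syntax; the `Row` type, the chosen columns and the sample data are assumptions made for the example, not A-Parser output.

```typescript
// Hypothetical row shape: a query URL plus two of the collected fields.
type Row = { url: string; title: string; length: number };

// Quote a CSV field only when it contains a quote, comma or line break.
function csvField(value: string | number): string {
  const text = String(value);
  return /[",\r\n]/.test(text) ? `"${text.replace(/"/g, '""')}"` : text;
}

function toCsv(rows: Row[]): string {
  const header = "url,title,length";
  const lines = rows.map((r) => [r.url, r.title, r.length].map(csvField).join(","));
  return [header, ...lines].join("\n");
}

function toJson(rows: Row[]): string {
  return JSON.stringify(rows, null, 2);
}

const rows: Row[] = [
  { url: "https://example.com/a", title: 'Title with "quotes", and a comma', length: 1200 },
];
console.log(toCsv(rows));
console.log(toJson(rows));
```

Quoting in the CSV output matters because article titles often contain commas and quotation marks, which would otherwise break the column layout.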

Possible settings

General settings for all scrapers

It supports all settings of the Net::HTTP scraper.
