SE::Google::Compromised - Checking for the "This site may be hacked" notice in Google

Overview of the scraper

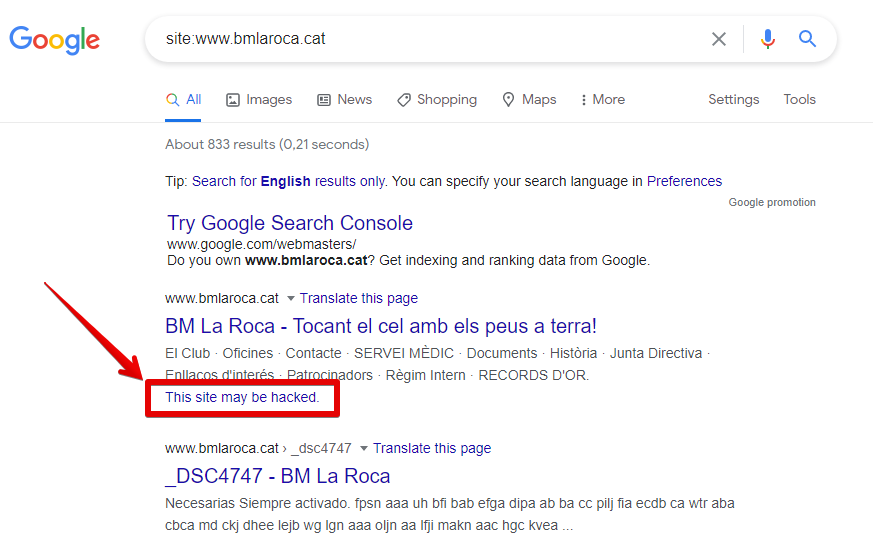

The Google Compromised scraper checks whether Google's search results show the "This site may be hacked" notice for a site. You can use it to check your own domain databases for this notice. More details about the notice are available in the Google Search Help Center.

A-Parser's functionality allows you to save parsing settings for future use (presets), set a parsing schedule, and much more.

Results can be saved in the form and structure you need thanks to the powerful built-in template engine, Template Toolkit, which lets you apply additional logic to the results and output data in various formats, including JSON, SQL and CSV.

Collected data

- Whether the "This site may be hacked" notice is present in Google

Capabilities

- Supports all capabilities of the SE::Google scraper.

Use cases

- Checking a list of domains for the "This site may be hacked" notice in Google

- Monitoring your own domains

Queries

As queries, specify the URLs of the sites to check, for example:

http://a-parser.com/

http://www.yandex.ru/

http://google.com/

http://russbehnke.com/

http://www.bmlaroca.cat/

http://vk.com/

http://facebook.com/

http://youtube.com/

Query substitutions

You can use built-in macros to automatically substitute subqueries from files. For example, to check one or more sites against a keyword database, specify several main queries:

ria.ru

lenta.ru

rbc.ru

yandex.ru

In the query format, specify a macro that substitutes additional words from the file Keywords.txt. This method allows you to check a database of sites against a database of keywords and get positions as a result:

$query {subs:Keywords}

For each initial search query, this macro creates as many additional queries as there are lines in the file. The total number of queries produced by the macro is therefore [number of initial queries (domains)] x [number of queries in the Keywords file] = [total number of queries].
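The multiplication above can be sketched outside A-Parser. The following Python snippet is only an illustration of how the query count grows, not A-Parser's own implementation; the function name `expand_queries` and the keyword list are hypothetical stand-ins for the macro and for the contents of Keywords.txt.

```python
from itertools import product


def expand_queries(domains, keywords):
    """Pair every domain with every keyword, imitating `$query {subs:Keywords}`."""
    return [f"{domain} {keyword}" for domain, keyword in product(domains, keywords)]


# Main queries from the example above.
domains = ["ria.ru", "lenta.ru", "rbc.ru", "yandex.ru"]
# Hypothetical contents of Keywords.txt (assumption, not from the original).
keywords = ["news", "weather", "sport"]

queries = expand_queries(domains, keywords)
print(len(queries))  # 4 domains x 3 keywords = 12
print(queries[0])    # ria.ru news
```

With 4 domains and 3 keywords the sketch yields 12 queries, so large keyword files multiply the workload quickly.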

You can also specify the protocol in the query format, so that bare domains can be used as queries:

http://$query

This format will append http:// to each query.
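Because the format adds http:// to every query unconditionally, a list that mixes bare domains with full URLs would end up with a doubled scheme. The sketch below shows one possible way to normalize such a list before loading it into A-Parser; the helper names `strip_scheme` and `apply_format` are hypothetical, and `apply_format` only imitates what the `http://$query` format does.

```python
def strip_scheme(query: str) -> str:
    """Remove a leading http:// or https:// so the query is a bare domain."""
    for scheme in ("http://", "https://"):
        if query.lower().startswith(scheme):
            return query[len(scheme):]
    return query


def apply_format(query: str) -> str:
    """Imitate the `http://$query` query format (illustration only)."""
    return "http://" + query


raw = ["a-parser.com", "http://vk.com", "https://youtube.com"]
prepared = [strip_scheme(q) for q in raw]
print([apply_format(q) for q in prepared])
```

After normalization every entry is a bare domain, so the format produces exactly one scheme per query.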

Output results examples

A-Parser supports flexible result formatting thanks to the built-in template engine, Template Toolkit, which allows it to output results in arbitrary form as well as in structured formats such as CSV or JSON.

Exporting the list of compromise checks

Result format:

$query: $compromised\n

An example of a result showing each URL and whether the "This site may be hacked" notice is present in Google (1 means present, 0 means absent):

http://a-parser.com/: 0

http://www.bmlaroca.cat/: 1

http://russbehnke.com/: 0

http://www.yandex.ru/: 0

http://google.com/: 0
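A result file in this format is easy to post-process. The following Python sketch assumes the output was saved exactly in the `$query: $compromised\n` format shown above; the function name `parse_results` is hypothetical. It splits each line on the last ": " because the URL itself contains a colon.

```python
def parse_results(text: str) -> dict:
    """Map each URL to True if it was flagged (value 1), False otherwise."""
    results = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        # Split on the last ": " so the colon in "http://" is left alone.
        url, flag = line.rsplit(": ", 1)
        results[url] = flag == "1"
    return results


sample = """http://a-parser.com/: 0
http://www.bmlaroca.cat/: 1
http://russbehnke.com/: 0
http://www.yandex.ru/: 0
http://google.com/: 0
"""

flagged = [url for url, hit in parse_results(sample).items() if hit]
print(flagged)  # ['http://www.bmlaroca.cat/']
```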

Links + anchors + snippets with position output

Outputting links, anchors, and snippets to a CSV table

Saving related keywords

Keyword competition

Checking links indexation

Saving in SQL format

Dumping results to JSON

Results processing

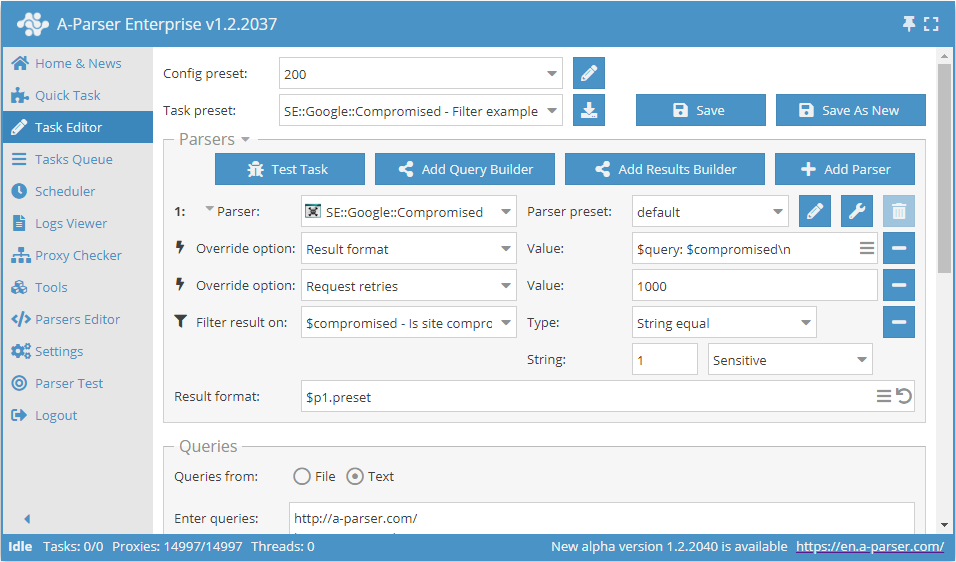

A-Parser allows you to process results directly during scraping. This section presents the most popular use cases for the SE::Google::Compromised scraper.

Saving domains with a check value of "1"

Add a filter and select the check value variable $compromised - Is site compromised from the drop-down list. Select the type String equals, then enter the required value, 1, in the String field. This filter removes all results whose check value is not 1.

Download example

How to import the example into A-Parser

eJx1VE1z2jAQ/SseTQ7tDDFw6MU3woROOzSkITkBB2GtiYosCUnmYzz8965kYxta

btZ+vH37dtclcdRu7asBC86SZFESHb5JQubPSfJdqY2AJBmrXBuVcwsseowmXDgw

ERxprgWQHtHUWDA+f3EvDaMYZLQQjvRK4k4asITagzGceQjO8J0pk1OHBEIY2VNR

+LCHXQHmlEQPaYu3XEpyvo+EYceTAWc42A7ScDAYdNOy0AkG1DUTkl5RrvzvVTDs

Ciq6YPittONK4sOCtOS8Wl2g7CT04tnrYVyL2jjndA/vqiIArRmFhReah54ZdeC9

cSXKl6+xO3oEyhj3NamoKnjV26ofku8COakw1uuGAkywITQ5CABBzAu7RS0uQYgi

5P6uckiSUWGhRyxSnVAkwm49HKWhTplZ0ADtJVFyJMQU9iDasID/VHDBcEVGGSb9

qBP/HzL7B+PctNcthRM/GOTQoITX0+xXm8XUVG2wc7bGvgXPucO3HatC+sEM0LgF

0I1mL16zXBloytTIdXU8Ew3Sr1c7spFuTVdtXI3l2pgqmfHNrF7ZS2Qh3/EWZ9If

jQDflyyEwLFYeGvXY2TrMfhHS/A2eRxK+NYvR0ecUsL+nFdUteG4ft88wRyV7Fat

IVMqxMfbtOsh7Urh49M5nfT79LG6/hgvpx9FS1nbD4dDfKKSwTE2RdexCT+H23BT

WLuGT7mtPFcw61xQo1Iap9T1iafmYKNwaVG486r5/TR/sfLuTygpz7gWf+xrleE1

9PFow2HYcMnD81/p/MfQ

See also: Result Filters

Link deduplication

Link deduplication by domain

Extracting domains

Removing tags from anchors and snippets

Filtering links by inclusion

Possible settings

Supports all settings of the SE::Google scraper, as well as the following additional ones:

| Parameter Name | Default Value | Description |
|---|---|---|
| Pages count | 1 | Number of search result pages to scrape (from 1 to 10) |