HTML::TextExtractor::LangDetect - Page Language Detection

Overview of the scraper

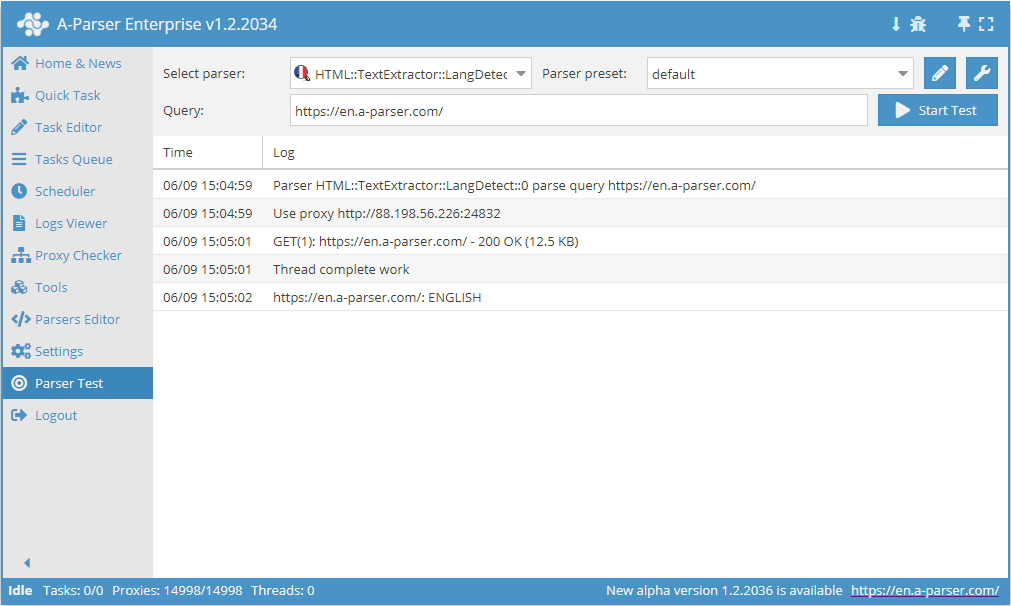

HTML::TextExtractor::LangDetect determines the language of a website and reports the detection accuracy as a percentage. It supports multi-page parsing and can navigate to a site's internal pages up to a specified depth, which makes it possible to traverse all pages of a site while collecting internal and external links. It has built-in means of bypassing CloudFlare protection and can use Chrome as the engine for scraping pages whose content is loaded by scripts. It can reach speeds of up to 2,000 requests per minute, which is 120,000 links per hour.
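Depth-limited navigation of this kind is essentially a breadth-first traversal. The sketch below illustrates the idea over a hypothetical in-memory link graph (`SITE`) instead of real HTTP requests. The function name `crawl`, the convention that depth 0 is the start page, and the rule that any other host counts as external are assumptions for illustration, not the scraper's actual implementation.

```python
from collections import deque
from urllib.parse import urlparse

# Hypothetical link graph standing in for fetched pages: page URL -> links found on it.
SITE = {
    "http://example.com/": ["http://example.com/a", "http://example.com/b", "http://other.org/"],
    "http://example.com/a": ["http://example.com/a/1", "http://example.com/"],
    "http://example.com/b": ["http://twitter.com/example"],
    "http://example.com/a/1": ["http://example.com/a/1/deep"],
}

def crawl(start: str, max_depth: int) -> tuple[set[str], set[str]]:
    """Breadth-first traversal of internal pages, going at most max_depth levels deep.

    Returns (internal pages visited, external links collected)."""
    host = urlparse(start).netloc
    visited = {start}
    external: set[str] = set()
    queue = deque([(start, 0)])  # (page URL, its depth from the start page)
    while queue:
        url, depth = queue.popleft()
        for link in SITE.get(url, []):
            if urlparse(link).netloc != host:
                external.add(link)  # collected, but never followed
            elif link not in visited and depth < max_depth:
                visited.add(link)
                queue.append((link, depth + 1))
    return visited, external
```

With `max_depth=1`, only the start page and the pages it links to directly are visited, while external links found on those pages are still collected.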

Collected data

- Website language

- Detection accuracy in %
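The documentation does not say how the language is detected or how the accuracy figure is computed. As one possible illustration, assuming a simple stopword-counting approach, the share of stopword hits that belong to the winning language can serve as a confidence percentage. The word lists and the `detect_language` helper below are hypothetical and are not the scraper's method.

```python
import re
from collections import Counter

# Tiny illustrative stopword lists (an assumption); real detectors typically
# use much larger profiles, such as character n-gram frequencies.
STOPWORDS = {
    "ENGLISH": {"the", "and", "of", "to", "is", "in", "that", "for"},
    "RUSSIAN": {"и", "в", "не", "на", "что", "с", "по", "это"},
    "GERMAN": {"der", "die", "und", "das", "ist", "nicht", "mit", "zu"},
}

def detect_language(text: str) -> tuple[str, float]:
    """Return (language, accuracy %), where accuracy is the winner's share of stopword hits."""
    words = re.findall(r"\w+", text.lower())  # \w also matches Cyrillic letters
    hits = Counter()
    for word in words:
        for lang, stop in STOPWORDS.items():
            if word in stop:
                hits[lang] += 1
    total = sum(hits.values())
    if total == 0:
        return "UNKNOWN", 0.0
    lang, best = hits.most_common(1)[0]
    return lang, round(100 * best / total, 1)
```

For example, a text with two German stopword hits and one English hit would be reported as GERMAN with 66.7% accuracy under this scheme.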

Capabilities

- Multi-page parsing (page navigation)

- Supports gzip/deflate/brotli compression

- Detection and conversion of website encodings to UTF-8

- Bypass CloudFlare protection

- Engine selection (HTTP or Chrome)

- Website language detection without using third-party services

- Detection accuracy in %
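Decompression and charset conversion have to happen before any language detection can run on the page text. Below is a minimal sketch using only the Python standard library, assuming the charset is taken from the `Content-Type` header first and from a `charset=` declaration in the page body otherwise (roughly what the Detect charset on content option suggests). The helper names are hypothetical, and brotli is left out because Python has no built-in decoder for it.

```python
import gzip
import re
import zlib

def decompress(body: bytes, encoding: str) -> bytes:
    """Undo the Content-Encoding. Brotli needs a third-party package, so it is omitted."""
    encoding = encoding.lower().strip()
    if encoding == "gzip":
        return gzip.decompress(body)
    if encoding == "deflate":
        try:
            return zlib.decompress(body)  # zlib-wrapped stream (RFC 1950)
        except zlib.error:
            return zlib.decompress(body, -zlib.MAX_WBITS)  # raw deflate (RFC 1951)
    return body  # identity or unknown encoding: pass through unchanged

# Matches charset=utf-8, charset="windows-1251", and similar declarations.
CHARSET_RE = re.compile(rb"""charset=["']?([\w-]+)""", re.I)

def to_utf8(body: bytes, content_type: str = "") -> str:
    """Decode the page using the header charset, then an in-page declaration, else UTF-8."""
    match = CHARSET_RE.search(content_type.encode("ascii", "ignore"))
    if match is None:
        match = CHARSET_RE.search(body[:2048])  # e.g. <meta charset="windows-1251">
    charset = match.group(1).decode("ascii") if match else "utf-8"
    try:
        return body.decode(charset, errors="replace")
    except LookupError:  # charset name Python does not recognize
        return body.decode("utf-8", errors="replace")
```

The returned `str` can then be written out with `.encode("utf-8")` wherever raw UTF-8 bytes are needed.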

Use cases

- Selecting domains with a specific content language

Queries

Specify a list of website URLs as queries, for example:

http://a-parser.com/

http://yandex.ru/

http://google.com/

http://vk.com/

http://facebook.com/

http://youtube.com/

Output result examples

A-Parser supports flexible output formatting thanks to its built-in Template Toolkit templating engine, which lets it output results in an arbitrary form as well as in structured formats such as CSV or JSON.

Default output

Result format:

$query: $lang\n
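Python's `string.Template` happens to use the same `$name` placeholder style as the result format above, so it can stand in for the template engine in a quick sketch. This is only an analogy: A-Parser renders output with Template Toolkit, and the `RESULTS` records and `render_*` helpers below are hypothetical. The CSV and JSON renderers show the kind of structured output the templating engine can produce.

```python
import csv
import io
import json
from string import Template

# Hypothetical scraper results: (query, detected language) pairs.
RESULTS = [("http://vk.com/", "RUSSIAN"), ("http://google.com/", "ENGLISH")]

def render_default(results: list[tuple[str, str]]) -> str:
    """Fill the '$query: $lang' line template once per result."""
    line = Template("$query: $lang\n")
    return "".join(line.substitute(query=q, lang=lang) for q, lang in results)

def render_json(results: list[tuple[str, str]]) -> str:
    """Serialize results as a JSON array of objects."""
    return json.dumps([{"query": q, "lang": lang} for q, lang in results])

def render_csv(results: list[tuple[str, str]]) -> str:
    """Serialize results as CSV with a header row."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["query", "lang"])
    writer.writerows(results)
    return buf.getvalue()
```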

Result example:

http://vk.com/: RUSSIAN

http://a-parser.com/: RUSSIAN

http://yandex.ru/: RUSSIAN

http://youtube.com/: ENGLISH

http://google.com/: ENGLISH

http://facebook.com/: ENGLISH
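For the use case of selecting domains with a specific content language, the default output can be post-processed line by line. Below is a small sketch, assuming the `$query: $lang` format shown above and reusing the example results; `domains_in_language` is a hypothetical helper.

```python
from urllib.parse import urlparse

# The example output from above, in the default "$query: $lang" format.
OUTPUT = """\
http://vk.com/: RUSSIAN
http://a-parser.com/: RUSSIAN
http://yandex.ru/: RUSSIAN
http://youtube.com/: ENGLISH
http://google.com/: ENGLISH
http://facebook.com/: ENGLISH
"""

def domains_in_language(output: str, wanted: str) -> list[str]:
    """Return the host names whose detected language equals `wanted` (case-insensitive)."""
    domains = []
    for line in output.splitlines():
        # Split on the last ": " so the "http://" colon is left intact.
        url, sep, lang = line.rpartition(": ")
        if sep and lang.strip().upper() == wanted.upper():
            domains.append(urlparse(url).netloc)
    return domains
```

Calling `domains_in_language(OUTPUT, "russian")` selects the three Russian-language domains from the example.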

Possible settings

| Parameter Name | Default Value | Description |
|---|---|---|
| Good status | All | Selects which server response is considered successful. If the scraper receives a different response during parsing, the request is retried with a different proxy. |
| Good code RegEx | | Lets you specify a regular expression to check the response code. |
| Method | GET | Request method. |
| POST body | | Content to send to the server when using the POST method. Supports the variables $query (request URL), $query.orig (original query), and $pagenum (page number when the Use Pages option is enabled). |
| Cookies | | Lets you specify cookies for the request. |
| User agent | Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1) | User-Agent header sent when requesting pages. |
| Additional headers | | Lets you specify arbitrary request headers, with support for templating features and variables from the query constructor. |
| Read only headers | ☐ | Read headers only. In some cases this saves traffic when content processing is not needed. |
| Detect charset on content | ☐ | Detect the encoding based on the page content. |
| Emulate browser headers | ☐ | Emulate browser headers. |
| Max redirects count | 7 | Maximum number of redirects the scraper will follow. |
| Max cookies count | 16 | Maximum number of cookies to save. |
| Bypass CloudFlare | ☑ | Automatically bypass the CloudFlare check. |
| Follow common redirects | ☑ | Allows http <-> https and www.domain <-> domain redirects within the same domain, bypassing the Max redirects count limit. |
| Engine | HTTP (Fast, JavaScript Disabled) | Choose between the HTTP engine (faster, no JavaScript) and Chrome (slower, JavaScript enabled). |
| Chrome Headless | ☐ | If enabled, the browser window is not displayed. |
| Chrome DevTools | ☑ | Allows the use of Chromium debugging tools. |
| Chrome Log Proxy connections | ☑ | If enabled, information about Chrome connections is written to the log. |
| Chrome Wait Until | networkidle2 | Determines when a page is considered loaded. |
| Use HTTP/2 transport | ☐ | Use HTTP/2 instead of HTTP/1.1. For example, Google and Majestic ban immediately if HTTP/1.1 is used. |
| Bypass CloudFlare with Chrome (Experimental) | ☐ | Bypass CloudFlare via Chrome. |
| Bypass CloudFlare with Chrome Max Pages | | Maximum number of pages when bypassing CloudFlare via Chrome. |
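The Follow common redirects option can be read as a rule that exempts certain redirects from the Max redirects count limit. The sketch below is one interpretation under stated assumptions: a redirect is treated as common when only the scheme (http/https) and/or a leading `www.` differ while the path and query stay the same. `is_common_redirect` and `follow` are hypothetical names that operate on an already recorded redirect chain rather than on live requests.

```python
from urllib.parse import urlsplit

def _strip_www(host: str) -> str:
    """Drop a leading 'www.' so www.domain and domain compare equal."""
    return host[4:] if host.startswith("www.") else host

def is_common_redirect(src: str, dst: str) -> bool:
    """True if dst differs from src only by http<->https and/or a www. prefix (assumed rule)."""
    a, b = urlsplit(src), urlsplit(dst)
    return (
        {a.scheme, b.scheme} <= {"http", "https"}
        and _strip_www(a.hostname or "") == _strip_www(b.hostname or "")
        and (a.path or "/", a.query) == (b.path or "/", b.query)
        and src != dst
    )

def follow(chain: list[str], max_redirects: int = 7, follow_common: bool = True) -> bool:
    """Walk a redirect chain; return False once counted redirects exceed max_redirects."""
    counted = 0
    for src, dst in zip(chain, chain[1:]):
        if follow_common and is_common_redirect(src, dst):
            continue  # exempt: does not count toward the limit
        counted += 1
        if counted > max_redirects:
            return False
    return True
```

In a chain such as `http://a.com/` → `https://a.com/` → `https://www.a.com/` → `https://www.a.com/home`, only the last hop counts toward the limit when common redirects are followed.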