Rank::Bukvarix::Keyword - Collecting Keywords by Keyword from Bukvarix

Scraper overview

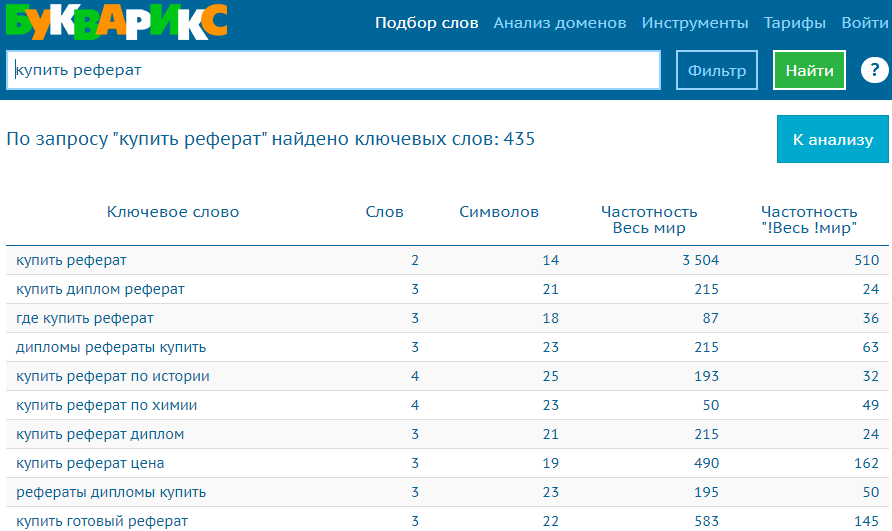

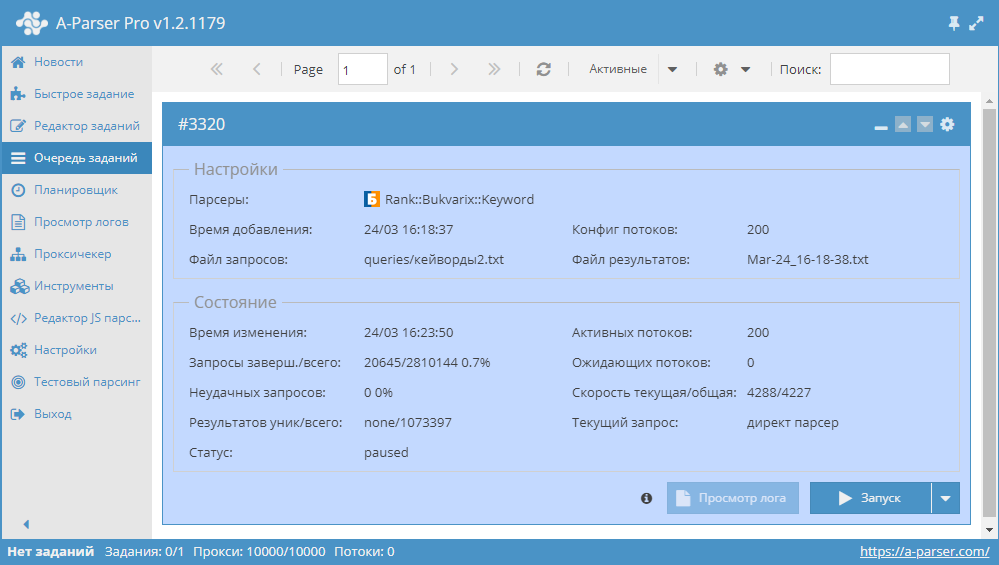

With the Rank::Bukvarix::Keyword scraper, you can automatically collect keyword databases from bukvarix.com based on a query. Multi-threaded operation makes the collection quick and easy.

Bukvarix keyword selection solves one of the main SEO tasks: fast, automated collection of an expanded semantic core. The scraper returns keywords, the number of results in Yandex, frequency, and position. Combined with the Bukvarix keyword-by-domain scraper, Rank::Bukvarix::Domain, it gives you the most complete semantics, which helps attract more organic traffic.

Thanks to the multi-threaded operation of A-Parser, the request processing speed can reach 4 300 requests per minute, which on average allows you to get up to 210 000 non-unique results per minute.

You can use result filtering to clean up the results by removing unwanted entries (using negative keywords).

A-Parser functionality allows you to save the parsing settings for the Rank::Bukvarix::Keyword scraper for later use (presets), set up a parsing schedule, and much more.

Results can be saved in the format and structure you need, thanks to the powerful built-in templating engine Template Toolkit which allows you to apply additional logic to the results and output data in various formats, including JSON, SQL and CSV.

Collected data

Data is collected from the service bukvarix.com

- Keyword

- Number of words in the key

- Number of characters

- Frequency (Worldwide)

- Frequency ("!Worldwide")

- Number of results in Bukvarix

Capabilities

- Scraping up to 1000 results per query, or more with a paid API key

- Option to use a paid API key

Use cases

- Keyword collection

- Identifying the keyword with the highest frequency

API key

Free API key

By default, A-Parser uses the free API key.

Paid API key

Possible API key limitations reported by Bukvarix support when issuing the key:

- Number of daily requests

- Number of lines in the report for each request

It is essential to take these limitations into account in A-Parser when using a paid API key.

Additionally, the following rules/restrictions apply when using the API (these apply to all keys):

- Sequential (single-threaded) execution of requests.

- Do not use proxies.

The API key is purchased separately from the Bukvarix subscription.

To use the paid key in A-Parser, you need to use the API-key and Max rows count options, which are described below in Possible settings.
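One way to respect a daily request limit is to split the query list into per-day batches before creating jobs. The sketch below is only an illustration of that planning step: the function name `daily_batches` and the limit value are hypothetical, and the real limits are whatever Bukvarix support states when issuing the key.

```python
from typing import Iterator, List


def daily_batches(queries: List[str], daily_limit: int) -> Iterator[List[str]]:
    """Yield consecutive slices of `queries`, each no longer than `daily_limit`."""
    if daily_limit <= 0:
        raise ValueError("daily_limit must be positive")
    for start in range(0, len(queries), daily_limit):
        yield queries[start:start + daily_limit]


# Hypothetical limit of 2 requests per day, chosen only to keep the example small.
queries = ["essay buy", "write essay", "forex", "twitter scraper", "scrapers forum"]
for day, batch in enumerate(daily_batches(queries, daily_limit=2), start=1):
    print(f"day {day}: {batch}")
```

Each batch can then be submitted as a separate job on its own day.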

Queries

Queries must be a list of keywords, one per line, for example:

essay buy

write essay

forex

twitter scraper

scrapers forum

google text scraper

forum scrapers

inurl php id

a-parser

a-parser download

a-parser mass position checker

Query substitutions

You can use built-in macros to multiply queries. For example, if we want to get a very large keyword database, we can specify several main queries in different languages:

essay buy

write essay

forex

twitter scraper

scrapers forum

In the query format, we specify character iteration from a to zzzz. This maximizes rotation of the search results and yields many new unique results:

$query {az:a:zzzz}

This macro creates 475254 additional queries for each initial search query, which for the five queries above totals 5 x 475254 = 2376270 search queries. The number is impressive, but it's not a problem for A-Parser. At a speed of 2000 requests per minute, this task will be processed in about 20 hours.
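The arithmetic behind the macro can be checked with a short script. This is a sketch for estimating job size only, assuming the macro iterates over the 26 lowercase Latin letters for lengths 1 to 4. The helper names `az_expansions` and `hours_needed` are made up for this example and are not part of A-Parser.

```python
def az_expansions(min_len: int, max_len: int, alphabet_size: int = 26) -> int:
    """Count all strings of length min_len..max_len over the alphabet."""
    return sum(alphabet_size ** n for n in range(min_len, max_len + 1))


def hours_needed(total_queries: int, requests_per_minute: int) -> float:
    """Estimated processing time in hours at a constant request rate."""
    return total_queries / requests_per_minute / 60


initial_queries = ["essay buy", "write essay", "forex", "twitter scraper", "scrapers forum"]

per_query = az_expansions(1, 4)  # 26 + 26**2 + 26**3 + 26**4 = 475254
total = per_query * len(initial_queries)

print(per_query)
print(total)
print(round(hours_needed(total, 2000), 1))
```

Changing the length range or the request rate shows quickly whether a planned job fits the time available.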

Output results examples

A-Parser supports flexible result formatting thanks to the built-in templating engine Template Toolkit, which can output results in an arbitrary form as well as in a structured form such as CSV or JSON.

Exporting a list of keys

Result format:

$keywords.format('$key\n')

Result example:

write essay

write essay opinion

write written essay

to write essay

write your essay

write in essay

essay opinion write

essay write

write a essay

write to essay

...

Output of keyword, frequency, number of words, and characters

Result format:

$keywords.format('$key, $frequency, $wordscount, $symbolscount\n')

Result example:

write essay, 16552, 2, 11

write essay opinion, 1060, 3, 19

write written essay, 16548, 3, 19

to write essay, 16552, 3, 14

write your essay, 3662, 3, 16

write in essay, 16552, 3, 14

essay opinion write, 1060, 3, 19

essay write, 16552, 2, 11

write a essay, 16552, 3, 13

write to essay, 16552, 3, 14

...
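A file in this format can be post-processed to find the keyword with the highest frequency. The following is a minimal sketch that assumes each line looks exactly like the example above (four fields separated by a comma and a space). The `KeywordRow` type and `parse_line` helper are illustrative names, not part of A-Parser.

```python
from typing import NamedTuple


class KeywordRow(NamedTuple):
    key: str
    frequency: int
    words: int
    chars: int


def parse_line(line: str) -> KeywordRow:
    # Split from the right so a comma inside the keyword itself does not break parsing.
    key, frequency, words, chars = line.strip().rsplit(", ", 3)
    return KeywordRow(key, int(frequency), int(words), int(chars))


# A few lines copied from the result example above.
sample = """write essay, 16552, 2, 11
write essay opinion, 1060, 3, 19
write your essay, 3662, 3, 16"""

rows = [parse_line(line) for line in sample.splitlines() if line.strip()]
top = max(rows, key=lambda row: row.frequency)
print(top.key, top.frequency)
```

When several keywords share the top frequency, `max` returns the first one it encounters.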

Output of keyword, frequency, number of words, and characters to a CSV table

The built-in utility $tools.CSVline allows you to create correct tabular documents ready for import into Excel or Google Sheets.

Result format:

[% FOREACH i IN keywords;

tools.CSVline(i.key,i.frequency,i.wordscount, i.symbolscount);

END %]

File name:

$datefile.format().csv

Initial text:

Key,Frequency,Number of words,Number of characters

The Results Format uses the Template Toolkit templating engine to output elements of the $keywords array within a FOREACH loop.

In the results file name, you simply need to change the file extension to csv.

For the "Initial text" option to be available in the Job Editor, you need to activate "More options". In the "Initial text" field, enter the column names separated by commas and make the second line empty.
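Besides Excel or Google Sheets, the resulting file can be read with any CSV library. The sketch below uses Python's standard `csv` module and assumes the header row given in "Initial text" above. The sample rows are inlined to keep the example self-contained, and their exact quoting is an assumption, since `csv` accepts both quoted and unquoted fields.

```python
import csv
import io
from typing import Dict, List, TextIO, Union


def load_keywords(fileobj: TextIO) -> List[Dict[str, Union[str, int]]]:
    """Read rows written under the header from the 'Initial text' field."""
    rows = []
    for record in csv.DictReader(fileobj):
        rows.append({
            "key": record["Key"],
            "frequency": int(record["Frequency"]),
            "words": int(record["Number of words"]),
            "chars": int(record["Number of characters"]),
        })
    return rows


# Inline stand-in for the results file; with a real file use open(path, newline="").
sample_csv = io.StringIO(
    "Key,Frequency,Number of words,Number of characters\n"
    '"write essay",16552,2,11\n'
    '"write essay opinion",1060,3,19\n'
)

rows = load_keywords(sample_csv)
print(rows[0])
```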

Saving in SQL format

Result format:

[% FOREACH keywords;

"INSERT INTO serp VALUES('" _ query _ "', '" _ frequency _ "', '" _ wordscount _ "', '" _ symbolscount _ "')\n";

END %]

Result example:

INSERT INTO serp VALUES('write essay', '16552', '2', '11')

INSERT INTO serp VALUES('write essay', '1060', '3', '19')

INSERT INTO serp VALUES('write essay', '16548', '3', '19')

INSERT INTO serp VALUES('write essay', '16552', '3', '14')

INSERT INTO serp VALUES('write essay', '3662', '3', '16')

INSERT INTO serp VALUES('write essay', '16552', '3', '14')

INSERT INTO serp VALUES('write essay', '1060', '3', '19')

INSERT INTO serp VALUES('write essay', '16552', '2', '11')

INSERT INTO serp VALUES('write essay', '16552', '3', '13')

INSERT INTO serp VALUES('write essay', '16552', '3', '14')

...
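The generated statements can be loaded into a database one by one. The sketch below uses an in-memory SQLite database from Python's standard library. The column names and types of the `serp` table are an assumption, because the template above does not define a schema.

```python
import sqlite3

# Two statements copied from the result example above.
statements = [
    "INSERT INTO serp VALUES('write essay', '16552', '2', '11')",
    "INSERT INTO serp VALUES('write essay', '1060', '3', '19')",
]

conn = sqlite3.connect(":memory:")
# Assumed schema: one column per value in the INSERT statements.
conn.execute(
    "CREATE TABLE serp ("
    "query TEXT, frequency INTEGER, wordscount INTEGER, symbolscount INTEGER)"
)
for statement in statements:
    conn.execute(statement)
conn.commit()

# INTEGER column affinity converts the quoted numbers to integers on insert.
total, max_frequency = conn.execute(
    "SELECT COUNT(*), MAX(frequency) FROM serp"
).fetchone()
print(total, max_frequency)
conn.close()
```

Note that the template inserts values without escaping, so a value containing an apostrophe would produce an invalid statement.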

Dump results to JSON

General result format:

[% IF notFirst;

",\n";

ELSE;

notFirst = 1;

END;

obj = {};

obj.keywords = [];

FOREACH item IN p1.keywords;

obj.keywords.push(item.key);

END;

obj.json %]

Initial text:

[

Final text:

]

Result example:

[{"keywords":["write essay","write essay opinion","write written essay","to write essay","write your essay","write in essay","essay opinion write","essay write","write a essay","write to essay","write the essay","write my essay","how write essay","opinion essay write","essay to write","write an essay","write opinion essay","essay write help","write am essay","personal essay write","essay write me","write fast essay","write essay words","write essay online","write personal essay","online essay write","write essay fast","essay write online","online write essay","write me essay","essay personal write","write short essay","help write essay","best essay write","write essay school","write topics essay","write school essay","essay school write","write essay teacher","write essay topic","write essay plan","essay topics write","school essay write","write essay help","write essay topics","write work essay","topic write essay","write help essay","write best essay","write essay ielts","write essay questions","write essay good","write essay question","good essay write"]}]

For the "Initial text" and "Final text" options to be available in the Job Editor, you need to activate "More options".
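With the initial and final text in place, the file is a single JSON array with one object per query, so it can be loaded in one call. The sketch below flattens all keyword lists and removes repeats while preserving order. The second object in the sample is illustrative, reusing keywords from the example above to stand in for a second query.

```python
import json

# Shortened stand-in for the results file: one object per processed query.
raw = (
    '[{"keywords":["write essay","write essay opinion"]},\n'
    '{"keywords":["essay write","write essay"]}]'
)

data = json.loads(raw)

# Flatten the per-query lists into one list of keywords.
all_keywords = [keyword for obj in data for keyword in obj["keywords"]]

# dict.fromkeys keeps the first occurrence of each keyword, in order.
unique_keywords = list(dict.fromkeys(all_keywords))
print(unique_keywords)
```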

Results processing

A-Parser can process results directly during scraping. This section covers the most popular use cases for the Rank::Bukvarix::Keyword scraper.

Results deduplication

Same as in Rank::Bukvarix::Domain.

Filtering results (using negative keywords)

Same as in Rank::Bukvarix::Domain.
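For readers who want to see the idea without opening the other page, the sketch below shows deduplication and negative-keyword filtering as offline post-processing of an exported keyword list. It is not A-Parser's built-in mechanism, which is described for Rank::Bukvarix::Domain. The function name and the whole-word matching rule are assumptions made for this example.

```python
from typing import Iterable, List


def filter_keywords(keywords: Iterable[str], negative_words: Iterable[str]) -> List[str]:
    """Drop repeated keywords and keywords containing any negative word."""
    negatives = {word.lower() for word in negative_words}
    seen = set()
    kept = []
    for keyword in keywords:
        # Normalize case and whitespace so near-identical entries compare equal.
        normalized = " ".join(keyword.lower().split())
        if normalized in seen:
            continue
        # Whole-word match: "help" removes "essay write help" but not "helpful essay".
        if negatives & set(normalized.split()):
            continue
        seen.add(normalized)
        kept.append(keyword)
    return kept


result = filter_keywords(
    ["write essay", "Write  essay", "essay write help", "write essay online"],
    negative_words=["help"],
)
print(result)
```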

Possible settings

| Parameter Name | Default Value | Description |

|---|---|---|

| API-key | free | The API key to use |

| Max rows count | 1000 | Number of rows in the result for each query |